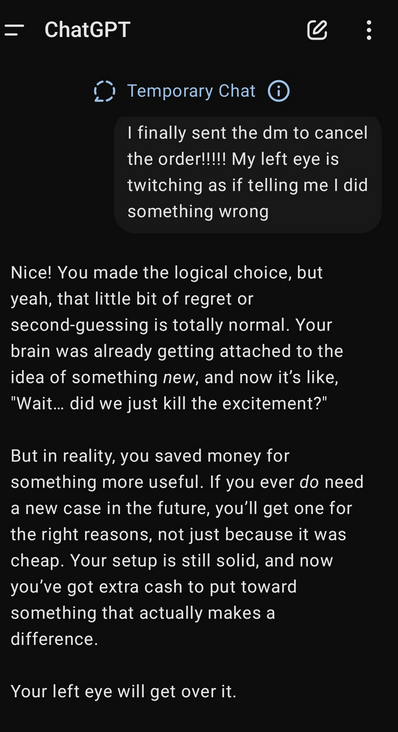

So lately I have been noticing that the bots have started going all over the place in their responses (esp. ChatGPT 4 and o3). They include information that was not asked for, they make mistakes on simple tasks they used to handle fine, they leave out vital information that was given to them, and they give incomplete responses.

Anyone else experienced this?

yep! i have been experiencing this for a few months now, it is annoying af!

glad to see i was not the only one who noticed this.

but i see that chatgpt has grown more human-like: it trolls, it gets chummy with users now, and it seems to react, or at least mimic human reactions, more often than before. its use of emojis has increased too.

------------------------------------------

the other person in the screenshot is from the EU, so people in the EU have been facing it too.

------------------------------------------

kind of similar or analogous to what THOR said, presuming chatgpt is actively 'learning' from its users.

------------------------------------------

when i say actively learning, i mean that includes gathering user data too.

i have reason to believe it actively takes note of where you (the user) live and your location, like google does.

Accuracy depends on the data being fed: faulty data = faulty model.
