Does anyone here use cloud VMs with GPUs to run AI workloads?

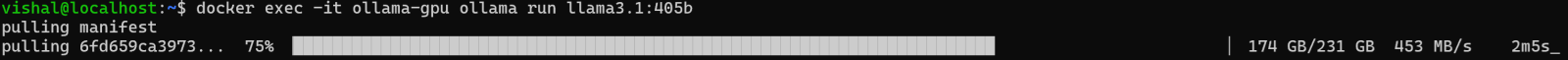

I'm trying to run the 405B-parameter version of Llama 3.1, the new LLM from Meta (Facebook), using Ollama (ollama.com). In GPU mode it requires at least 230 GB of total GPU memory.
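For a rough sense of where that number comes from and how many GPUs it implies, here is a back-of-envelope sketch. It assumes the weights are quantized to about 4 bits each (my assumption, not something I have verified for this exact build) and treats the 230 GB figure above as the target. The function names and the per-GPU sizes are just illustrative.

```python
import math

def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8

def gpus_needed(total_gb: float, per_gpu_gb: float) -> int:
    """Smallest number of identical GPUs whose combined VRAM covers total_gb."""
    return math.ceil(total_gb / per_gpu_gb)

# Assumption: ~4-bit quantized weights; KV cache and runtime overhead come on top.
weights_gb = weight_memory_gb(405, 4)
print(f"weights alone: ~{weights_gb} GB")

REQUIRED_GB = 230  # total GPU memory figure quoted above
for per_gpu_gb in (24, 48, 80):  # illustrative VRAM sizes per GPU
    print(f"{per_gpu_gb} GB GPUs: at least {gpus_needed(REQUIRED_GB, per_gpu_gb)}")
```

So even with 80 GB cards this is a multi-GPU VM, which is exactly the kind of instance that tends to sit behind a quota request.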

I have been trying to launch VMs on various cloud providers (Linode, TensorDock, and the big three: AWS, GCP and Azure) from my personal accounts, not corporate ones. They are all making a fuss and forcing me to request a quota increase before I can launch such VMs (many vCPUs, like 48 or 64, lots of RAM, and multiple GPUs to meet the VRAM requirement)...

Any suggestions? I just want to launch such a VM for a couple of hours, run the Ollama Docker image with the Llama 3.1 model, and then delete it...
