
Would a Corsair RM1000x be enough for 2x 3080 Ti and a 3090?

- Thread starter draglord



- Status

- Not open for further replies.

I don't know how long it would take, but if you are going with this many GPUs, I would say you should move to the Ryzen Threadripper (TRX) platform or Intel Xeon CPUs with good motherboards, which give you more PCIe slots and many more lanes.

Also, wouldn't it be better to sell one 3080 Ti and, instead of buying a 3060, use that money to get another 3090?

May I ask what case you are using to fit the four GPUs?

Hi, I have 3 GPUs right now and no case; they're running open-air.

I'm not sure it would be a good idea to sell the 3080 Ti; it's almost as powerful as the 3090. I just want to know whether a 3060 would cause a bottleneck.

What case are you looking at? I would suggest the Phanteks Enthoo Pro 2 or Enthoo 719, because they can house 2 PSUs, so you can run 3 GPUs. But I doubt any consumer motherboard can actually provide the required PCIe lanes and clearance for those 3 massive GPUs and still run them at full bandwidth. I think only the MSI X670E Godlike can do x8/x8/x8, and it's expensive. So, like the poster above said, you should look into HEDT boards and processors. I'm also searching for the best budget solution for ML.
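The lane arithmetic behind the x8/x8/x8 point can be sketched roughly as below. It assumes all GPUs share one pool of CPU lanes wired to the slots and that the board can split that pool into any standard link width; both are simplifications (real boards only support the bifurcation modes listed in their manual), and the lane pools in the example are illustrative, not specs of any particular board.

```python
# Standard PCIe link widths, widest first.
STANDARD_WIDTHS = (16, 8, 4, 2, 1)


def widest_uniform_width(num_gpus: int, slot_lanes: int) -> int:
    """Widest standard link width every GPU could get at the same time.

    Assumes all GPUs share one pool of `slot_lanes` CPU lanes and that the board
    can split it freely; returns 0 if even x1 per GPU does not fit.
    """
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    for width in STANDARD_WIDTHS:
        if width * num_gpus <= slot_lanes:
            return width
    return 0


if __name__ == "__main__":
    # Illustrative lane pools, not specs of any particular board.
    for slot_lanes in (16, 24, 48):
        print(f"{slot_lanes} slot lanes, 3 GPUs -> x{widest_uniform_width(3, slot_lanes)} each")
```

With a 16-lane pool, three cards get x4 each at best; the larger lane pools of HEDT/workstation platforms are what make x8 or x16 per card possible.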

Alucard1729

Forerunner

I have bought a 3090 and now I'm in a fix. If I buy a 12 GB 3060 and train my models on all 4 GPUs (3090 + 2x 3080 Ti + 3060), would the 3060 cause a bottleneck?

I suggested the RTX 3060 only for the case where you sell the 3080 Ti and end up with 2x 3090; then an additional RTX 3060 would help.
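Why a slower card can hold the others back: in synchronous data-parallel training (the usual DDP-style setup), every step waits for all GPUs, so with an equal batch per GPU the slowest card sets the pace. The sketch below models only that effect. The samples-per-second figures are made-up placeholders, not benchmarks of these cards, and it ignores communication cost and memory limits. Uneven per-GPU batches would also need the loss weighting adjusted so that every sample counts equally.

```python
def step_time(batch_per_gpu: list[int], samples_per_sec: list[float]) -> float:
    """A synchronous step finishes only when the slowest GPU is done."""
    return max(b / s for b, s in zip(batch_per_gpu, samples_per_sec))


def effective_throughput(batch_per_gpu: list[int], samples_per_sec: list[float]) -> float:
    """Samples processed per second across all GPUs."""
    return sum(batch_per_gpu) / step_time(batch_per_gpu, samples_per_sec)


def proportional_split(global_batch: int, samples_per_sec: list[float]) -> list[int]:
    """Split the global batch roughly in proportion to each GPU's speed."""
    total = sum(samples_per_sec)
    split = [int(global_batch * s / total) for s in samples_per_sec]
    # Give the samples lost to rounding down to the fastest GPUs first.
    leftover = global_batch - sum(split)
    fastest_first = sorted(range(len(split)), key=lambda i: -samples_per_sec[i])
    for i in fastest_first[:leftover]:
        split[i] += 1
    return split


if __name__ == "__main__":
    # Hypothetical relative speeds for: 3090, 3080 Ti, 3080 Ti, 3060.
    speeds = [100.0, 95.0, 95.0, 45.0]
    equal = [32, 32, 32, 32]
    print("3 GPUs, equal split:", round(effective_throughput(equal[:3], speeds[:3])))
    print("4 GPUs, equal split:", round(effective_throughput(equal, speeds)))
    balanced = proportional_split(128, speeds)
    print("4 GPUs, balanced:", balanced, round(effective_throughput(balanced, speeds)))
```

With these placeholder numbers, the equal split drops from 285 to 180 samples/s when the 3060 joins, while the proportional split recovers to about 328 samples/s.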

Parallelization would be a pain early on in your learning. A better option would be to use 2x RTX 3090 for training and tuning, and run inference on the spare RTX 3060 (before deploying the model).
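One low-effort way to do that split is to start the training and inference jobs as separate processes and restrict which GPUs each one sees through the standard `CUDA_VISIBLE_DEVICES` environment variable. In this sketch the device indices and the script names `train.py` and `infer.py` are placeholders. Setting `CUDA_DEVICE_ORDER=PCI_BUS_ID` makes CUDA number the cards in the same order `nvidia-smi` shows them (CUDA's default ordering is fastest-first).

```python
import os
import subprocess
import sys

# Hypothetical GPU indices; confirm the real order with `nvidia-smi`.
TRAIN_GPUS = "0,1"  # e.g. the two RTX 3090s
INFER_GPU = "2"     # e.g. the spare RTX 3060


def env_for(devices: str) -> dict[str, str]:
    """Copy the current environment, exposing only the given GPUs to CUDA."""
    env = dict(os.environ)
    env["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"  # number GPUs like nvidia-smi does
    env["CUDA_VISIBLE_DEVICES"] = devices
    return env


def launch(script: str, devices: str) -> subprocess.Popen:
    """Start a Python script that can only see `devices`."""
    return subprocess.Popen([sys.executable, script], env=env_for(devices))


if __name__ == "__main__":
    # train.py / infer.py are placeholder names; skip any that don't exist.
    jobs = [("train.py", TRAIN_GPUS), ("infer.py", INFER_GPU)]
    procs = [launch(script, gpus) for script, gpus in jobs if os.path.exists(script)]
    for proc in procs:
        proc.wait()
```

Inside each process the visible cards are renumbered from 0, so the training script sees two devices and the inference script sees one, with no code changes beyond the launcher.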

First of all, you have more than enough to work with. Rather than buying more GPUs just because prices are low right now, spend that money on the motherboard, since it dictates the build.

I had presented that idea earlier in case you were deep into this. Machine vision, LLMs, RL, or anything else that comes out can easily be run on the set of GPUs you already have.

I started with a laptop GTX 1060 and felt the lack of horsepower when implementing models from SOTA papers around 2020. That is when I decided to build a workstation, which I did in 2021 (that's actually how I discovered this site XD). In the meantime I used Colab among other things, looked into numerous ways to use multi-GPU setups (came across DALI), and also started CUDA programming.

My goal was to learn how to scale my projects when needed.

As you can see, most of my time didn't go into cherry-picking hardware (the same goes for my colleagues). It was around 2021 that a swollen laptop battery pushed me into building my own workstation.

Coming to my hardware selection: I didn't want to waste any time on hardware issues, so I went with a platform I thought had matured enough, i.e. Ryzen with an X570 board (Aorus Pro WiFi).

I bought two RTX 3090s and later plugged in an RX 550. I installed Debian and it's been rock solid (with fourdot fans, of course XD).

I installed 4 SSDs (2 TB each) for projects, applications, etc., plus 2 large HDDs for archival storage.

The reasons for going with this setup were:

1) The Aorus Pro was the lowest-priced X570 board with a dual x8 PCIe setup.

2) In case GPU addressing turned out not to be easy, having NVLink at my disposal would be handy (glad this wasn't necessary).

If I had to build again, I would definitely go with an EPYC-based platform, but that's more because of my current use case, not purely for AI/ML.

Getting 2x RTX 3090 was a turning point for me because, with around 48 GB of VRAM, I could fit more of the models that were coming out.
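The 48 GB figure can be turned into a quick fit check: weights alone take roughly parameter count × bytes per parameter, and full training with Adam in mixed precision is commonly estimated at about 16 bytes per parameter before activations. These are rules of thumb, not measurements; the 0.9 headroom factor is an arbitrary assumption; and the 48 GB is two separate 24 GB pools, so a model only benefits from both if it is split across the cards.

```python
# Approximate storage per parameter for the weights alone.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
# Rule of thumb for Adam + mixed precision: fp16 weights and grads (2 + 2 bytes)
# plus fp32 master weights and two Adam moments (4 + 4 + 4 bytes); no activations.
ADAM_MIXED_PRECISION_BYTES = 16.0


def footprint_gb(params_billion: float, bytes_per_param: float) -> float:
    """Billions of parameters x bytes each = gigabytes (decimal GB)."""
    return params_billion * bytes_per_param


def fits(params_billion: float, bytes_per_param: float, vram_gb: float,
         headroom: float = 0.9) -> bool:
    """True if the estimate fits in `headroom` of the VRAM (headroom is an assumption)."""
    return footprint_gb(params_billion, bytes_per_param) <= vram_gb * headroom


if __name__ == "__main__":
    fp16 = BYTES_PER_PARAM["fp16"]
    for vram in (24, 48):  # one RTX 3090 vs. a pair (only if the model is split)
        print(f"{vram} GB: 13B fp16 weights fit? {fits(13, fp16, vram)}; "
              f"7B Adam training fits? {fits(7, ADAM_MIXED_PRECISION_BYTES, vram)}")
```

By this estimate, 13B-parameter fp16 weights (about 26 GB) overflow a single 24 GB card but fit across two, while full Adam training of even a 7B model (about 112 GB) is out of reach without further tricks.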

Bandwidth is important (almost everything). People have used multiple GPUs with risers, similar to you, with varying degrees of success. Having at least x4 lanes for each GPU would be good. I didn't want to spend on OCuLink adapters, so I went with a motherboard that provided enough lane bifurcation.
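To put numbers on the lane widths: PCIe 3.0 signals at 8 GT/s per lane, 4.0 at 16 GT/s and 5.0 at 32 GT/s, all with 128b/130b encoding, so usable bandwidth per direction is roughly rate × 128/130 ÷ 8 bytes per lane. This sketch ignores packet overhead, so real transfers come in somewhat lower, and the 2 GB payload is an arbitrary illustration (about the size of fp16 gradients for a 1B-parameter model).

```python
GIGATRANSFERS_PER_SEC = {3: 8.0, 4: 16.0, 5: 32.0}  # per lane, by PCIe generation
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding (PCIe 3.0 and later)


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, ignoring packet overhead."""
    return GIGATRANSFERS_PER_SEC[gen] * ENCODING_EFFICIENCY / 8 * lanes


def transfer_seconds(gigabytes: float, gen: int, lanes: int) -> float:
    """Time to move `gigabytes` over the link at the theoretical rate."""
    return gigabytes / link_gb_per_s(gen, lanes)


if __name__ == "__main__":
    payload_gb = 2.0  # illustrative payload, e.g. fp16 gradients of ~1B params
    # (3, 1) stands in for a PCIe 3.0 x1 riser link.
    for gen, lanes in [(4, 16), (4, 8), (4, 4), (3, 1)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_gb_per_s(gen, lanes):5.2f} GB/s, "
              f"{transfer_seconds(payload_gb, gen, lanes) * 1000:6.1f} ms for {payload_gb} GB")
```

On these theoretical figures, a PCIe 4.0 x8 slot (about 15.8 GB/s) moves data roughly 16 times faster than a PCIe 3.0 x1 riser (about 1 GB/s), which is why x4 per GPU is a sensible floor.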

I always avoided these risers because, when you're learning and practicing on the hardware, things are very crude early on and more time is spent debugging; you wouldn't want to spend even more time chasing inconsistent model outputs because, say, a riser goes kaput. There's also the question of how these newer GPUs address memory with Resizable BAR enabled, and whether that is negatively affected by limiting the lanes (I've also seen people comment that their RTX 3080 on a riser performs similar to a 3060 in a PCIe slot XD).

I just didn't want the hassle, and preferred to spend that time on learning and testing.

If you have any further queries, PM me.


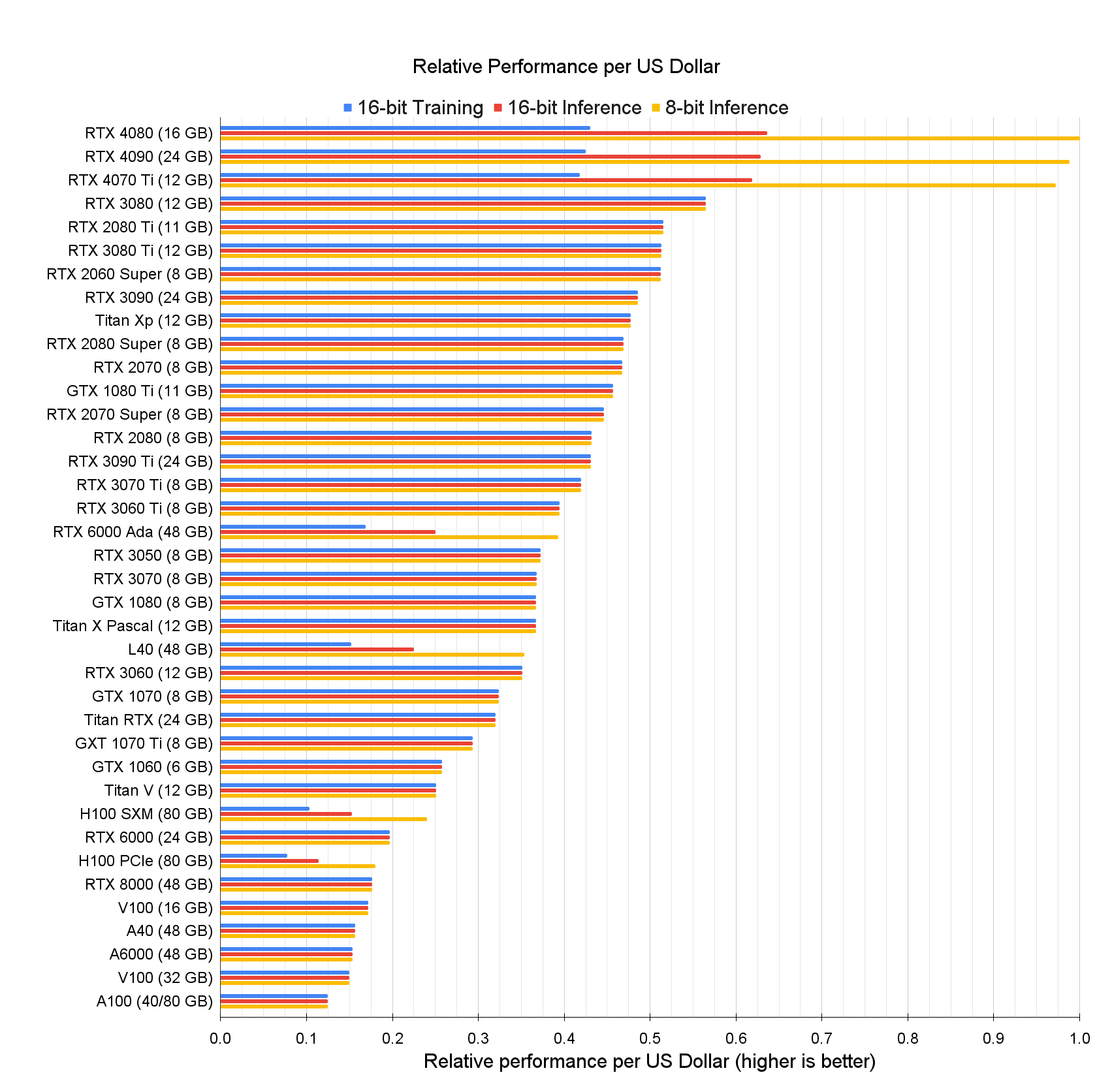

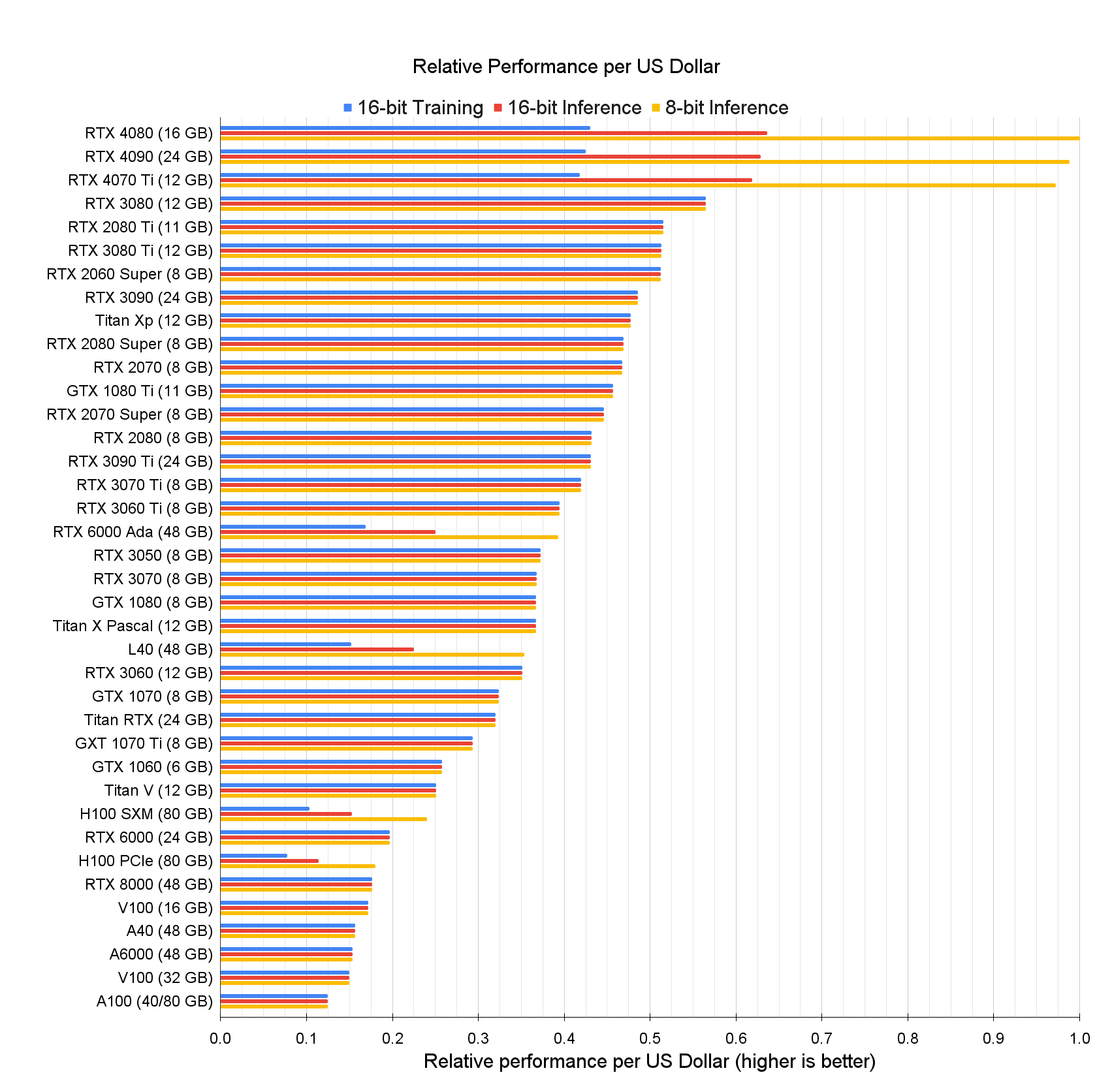

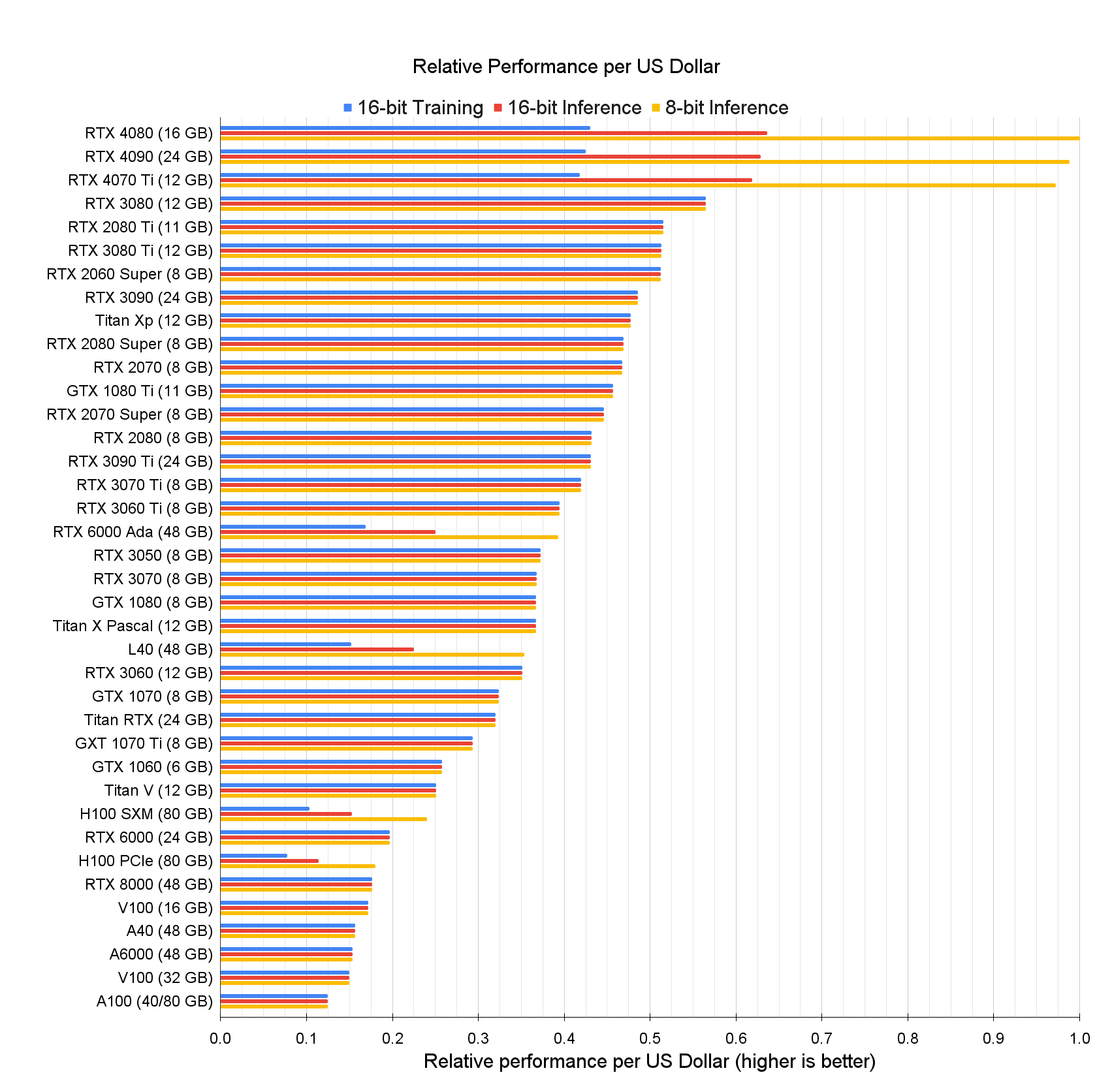

I feel this is a must-read for anyone looking to buy hardware for this field:


timdettmers.com

The Best GPUs for Deep Learning in 2023 — An In-depth Analysis

Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.


Alucard1729

Forerunner

Glad to see this updated for new platforms.

Great, thanks for the detailed answer.
