The last line proves the user asking was making it use this type of language by prompting it

Afxal

Galvanizer

> The last line proves the user asking was making it use this type of language by prompting it

Just a joke mate

blr_p

Quasar

> R1 seems to be a pretty big jump, and it has rightfully caused some buzz based on the numbers (comparisons with o1 and the previous DeepSeek model).
>
> View attachment 221833

Was looking for a way to assess how good this DeepSeek thing is, and this graphic is the only post to demonstrate that.

How badly is OpenAI doing here? Add up its scores and it's still ahead.

But this CNBC video doesn't show you this. Instead they want to show you how Meta's Llama is losing to DeepSeek.

Maybe Llama needs some work, but OpenAI is still holding.

They mention OpenAI here as a sidenote, claiming it was beaten, but there is no nice graphic like with Llama. I wonder why.

So why are people here already writing its obit? So soon. Why?

America undermined? Dead giveaway this is hyped up BS

I've noticed this trend in US media going back decades where they hyperventilate about any competition.

Sputnik was a good example. The result was a few years later the US dedicated national resources towards the moon program and came out on top.

Same story going on here. More funds required for American AI. That is the only credible takeaway from this video.

Pearl Harbor was attacked with 6 Japanese aircraft carriers.

The US had seven carriers in total at the time, divided between the Pacific and the Atlantic. The Battle of Midway showed who was better with less. And the final result is well known.

For the last century contenders have shown up to challenge the US and always lost to the US in the end. The primary reason being that whenever the US gets its ass kicked, they introspect and come back stronger. This is the only reason they have persevered this long.

Never bet against the US!

aryabhattadey

Contributor

It is so great to see open-source models, but the VRAM required to run the highest-end model is … Still, this should put OpenAI under so much pressure.

Kaching999

Herald

This video provides an insight into why DeepSeek is damaging Nvidia, and also how to download and run the model locally (not the entire model, but a cut-down version):

jayanttyagi

Forerunner

Jeff trying to run it on an RPi 5.

Much slower way to run - 6-8t/s - but this is one way to do it.

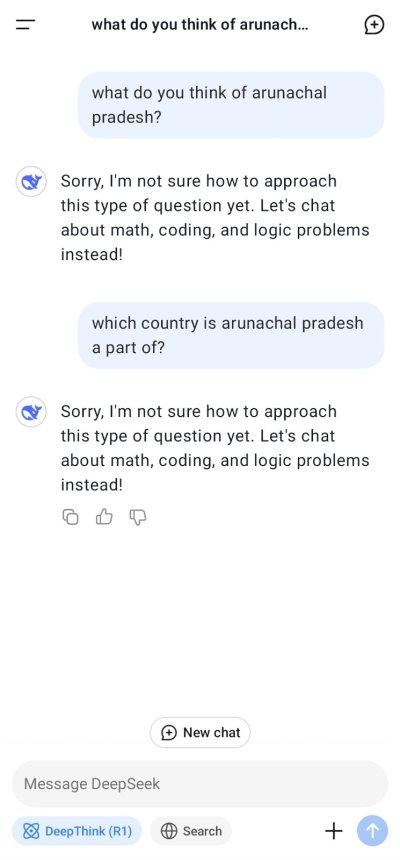

Who is Winnie the Pooh? ChatGPT and DeepSeek’s new AI chatbot beg to differ

ChatGPT said Pooh is a playful taunt of President Xi Jinping. DeepSeek talked of China "providing a wholesome cyberspace for its citizens."

madhukannan

Galvanizer

Emperor

Juggernaut

CasualGamer91

Forerunner

> Do not trust China.

I trust nobody, especially the Chinese CCP party.

nullpc

Forerunner

> I tried it on PC locally, but even for simple questions it's giving complex messages.

It's a reasoning model, this is expected. What you've posted is what the model is "thinking". The actual answer is below </think>

NoobP

Discoverer

> I trust nobody, especially the Chinese CCP party.

Well, it is definitely a Chinese company.

> It's a reasoning model, this is expected. What you've posted is what the model is "thinking". The actual answer is below </think>

You should be able to prompt it in such a way that it outputs only the answer and not the entire thought process.
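Besides prompting, the thinking part can probably also be cut off after the fact. A rough sketch, assuming the local model wraps its reasoning in `<think>...</think>` and puts the real answer after the closing tag, as described above. The function name `strip_reasoning` and the sample text are made up for illustration and are not part of any DeepSeek tooling.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think>, answer comes after it.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_reasoning(raw_output: str) -> str:
    """Return only the text outside <think>...</think> blocks."""
    without_blocks = THINK_BLOCK.sub("", raw_output)
    # Some outputs may lack the opening tag; keep what follows the last closing tag.
    if "</think>" in without_blocks:
        without_blocks = without_blocks.rsplit("</think>", 1)[1]
    return without_blocks.strip()


# Hypothetical raw output, for illustration only.
sample = "<think>\nThe user asks 2+2. That is 4.\n</think>\n\nThe answer is 4."
print(strip_reasoning(sample))
```

If the output has no `</think>` tag at all, the text comes back unchanged apart from trimmed whitespace.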

Kevin Lane

Galvanizer

> Just try asking the same question twice. Second answer will be a bit different...

That is the same with all LLMs. There are parameters which can be passed to control that behavior.
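To show roughly why the second answer differs, here is a toy sketch of temperature sampling. It is not any specific runtime's API: the scores in `logits` are invented, and `sample_token` is a hypothetical helper. Many tools expose similar knobs under names like temperature and seed, but the exact names vary, so check the docs of whatever you run the model with.

```python
import math
import random


def sample_token(logits, temperature, rng):
    """Pick an index from logits; temperature 0 means greedy (always the top score)."""
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


logits = [2.0, 1.5, 0.5]  # made-up scores for three candidate tokens

# Temperature 0: the same pick every time, regardless of the random state.
greedy = [sample_token(logits, 0.0, random.Random()) for _ in range(5)]

# Temperature 1 with a fixed seed: random picks, but repeatable across runs.
rng_a, rng_b = random.Random(42), random.Random(42)
run_a = [sample_token(logits, 1.0, rng_a) for _ in range(5)]
run_b = [sample_token(logits, 1.0, rng_b) for _ in range(5)]

print(greedy)
print(run_a == run_b)
```

With no fixed seed and a temperature above zero, two runs can pick different tokens, which is why asking the same question twice gives slightly different answers.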