The thing to understand is how the frames are generated to begin with. Frame generation uses interpolation, which is a fancy way of saying "guessing". Interpolation in any form reduces the quality of the rendered frame. A frame generated by the game engine is significantly different from one generated by the driver, so the generated frame always looks worse, and because the driver has no way to respond to game input, you get fairly severe lag.
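To make the "guessing" point concrete, here is a minimal sketch of the crudest possible interpolation: a straight linear blend of two frames. This is not what the actual FG implementations do (they use motion information rather than a plain blend), and the names `blend_frames` and `mean_abs_error` are made up for illustration. It only shows why an in-between frame built from its neighbours differs from one the engine would have rendered.

```python
def blend_frames(prev_frame, next_frame, t=0.5):
    """Naive linear interpolation between two frames (lists of pixel rows)."""
    return [
        [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(prev_frame, next_frame)
    ]


def mean_abs_error(frame_x, frame_y):
    """Average per-pixel absolute difference between two frames."""
    diffs = [
        abs(x - y)
        for row_x, row_y in zip(frame_x, frame_y)
        for x, y in zip(row_x, row_y)
    ]
    return sum(diffs) / len(diffs)


# A 1x5 "frame": one bright pixel moving from column 0 to column 4.
frame_a = [[1.0, 0.0, 0.0, 0.0, 0.0]]
frame_b = [[0.0, 0.0, 0.0, 0.0, 1.0]]

# What the engine would render halfway through the motion (assumed here):
# the pixel sitting in the middle column.
rendered = [[0.0, 0.0, 1.0, 0.0, 0.0]]

# What the naive blend guesses: two half-bright ghosts, nothing in the middle.
guessed = blend_frames(frame_a, frame_b)

print(guessed)
print(mean_abs_error(guessed, rendered))
```

Note that the blend needs `frame_b` before it can produce anything, so the newest real frame has to be held back while the in-between frame is shown. That hold-back is on the order of a native frame time (roughly 16.7 ms at 60 fps), on top of whatever the generation step itself costs.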

With a 40 series card the difference is already quite noticeable, even if the native framerate is high enough. It can cause severe motion sickness, as the movement on screen is disconnected from your actual input (it does for me at least). I have three 40 series cards (90, TiS and 60) and all exhibit the same issues (in Hogwarts Legacy, for example, I find it unusable). In slower paced games it's acceptable, but not needed if the game is well-optimised.

One of the earliest uses of interpolation was in digital audio, where it was used to render output samples that were not captured during digitisation but were needed to complete the waveform. The method was the same, and early versions of digital audio were quite poor compared to their analog counterparts. FG is no different in its intent, but it falls much shorter because the dataset is larger and there are more variables. If it is tightly integrated into the game engine it can work, but it usually sits closer to the latter half of the pipeline (at least the first FG did).
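The audio case is easy to sketch. Below is a rough example, assuming a 1 kHz sine sampled at 8 kHz (both numbers picked purely for illustration), that fills in the halfway sample between each captured pair with a straight linear interpolation and compares it with the true waveform. Real resamplers use much better filters than this; the point is only that a filled-in sample is an estimate, not a measurement.

```python
import math

TONE_HZ = 1000.0    # assumed test tone
SAMPLE_HZ = 8000.0  # assumed capture rate


def true_value(t):
    """The actual waveform at time t (seconds)."""
    return math.sin(2 * math.pi * TONE_HZ * t)


def lerp(a, b, frac):
    """Linear interpolation between two captured samples."""
    return a + (b - a) * frac


# Captured samples over one period of the tone (8 intervals, 9 points).
times = [n / SAMPLE_HZ for n in range(9)]
captured = [true_value(t) for t in times]

# Guess the missing sample halfway between each captured pair and
# compare it with what the waveform really was at that instant.
errors = []
for n in range(len(captured) - 1):
    mid_t = (times[n] + times[n + 1]) / 2
    guessed = lerp(captured[n], captured[n + 1], 0.5)
    errors.append(abs(guessed - true_value(mid_t)))

max_error = max(errors)
print(f"largest midpoint error: {max_error:.4f} of full scale")
```

The error shrinks as the capture rate goes up relative to the signal, which is the audio equivalent of FG behaving better when the native framerate is already high.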

Now that they are moving to 4x FG and higher TOPS, we can also expect better LLM and media generation once Torch gets updated for the new cards. However, the smaller framebuffers mean lots of models will not fit fully in VRAM, which is why for the AI tests they had to run FP4 on the 5070: the FP8 model is 11GB (which means even 16GB cards will have a tough time) and is known to run at half the speed of FP4, besides not being able to run on the 5070. This is why they hobbled the 4090 as much as possible for that test. Everybody knows the 5070 will be slower than the 4090. That is a given; the question is by how much. If you lose 25% performance and keep to a 300W power window at half or a third of the price, it will still be a great card as long as you can live with half the framebuffer.
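The VRAM side of this is simple arithmetic. Here is a back-of-the-envelope sketch, assuming weights dominate, 1 GB = 1e9 bytes, FP8 is one byte per parameter and FP4 is half a byte (ignoring any scale factors the quantised format stores). The 3GB working overhead is a made-up placeholder for activations and everything else that has to live in VRAM, not a measured figure, and `weights_gb` and `fits` are hypothetical helper names.

```python
BITS_PER_BYTE = 8


def weights_gb(params_billions, bits_per_param):
    """Approximate weight size in GB (1 GB = 1e9 bytes), weights only."""
    return params_billions * bits_per_param / BITS_PER_BYTE


def fits(weight_size_gb, vram_gb, overhead_gb):
    """True if the weights plus an assumed working overhead fit in VRAM."""
    return weight_size_gb + overhead_gb <= vram_gb


# Back out the parameter count from the 11GB FP8 figure:
# at one byte per parameter, 11GB is roughly 11 billion parameters.
PARAMS_BILLIONS = 11.0

# ASSUMPTION: placeholder overhead for activations and other buffers.
OVERHEAD_GB = 3.0

fp8_gb = weights_gb(PARAMS_BILLIONS, 8)
fp4_gb = weights_gb(PARAMS_BILLIONS, 4)

# 12GB (5070), 16GB and 24GB (4090) framebuffers.
for vram in (12, 16, 24):
    print(
        f"{vram}GB card: "
        f"FP8 ({fp8_gb:.1f}GB) fits={fits(fp8_gb, vram, OVERHEAD_GB)}, "
        f"FP4 ({fp4_gb:.1f}GB) fits={fits(fp4_gb, vram, OVERHEAD_GB)}"
    )
```

With that placeholder overhead, FP8 does not fit in 12GB and only just squeezes into 16GB, while FP4 fits comfortably. Change `OVERHEAD_GB` and the 16GB answer flips, which is the "tough time" part.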

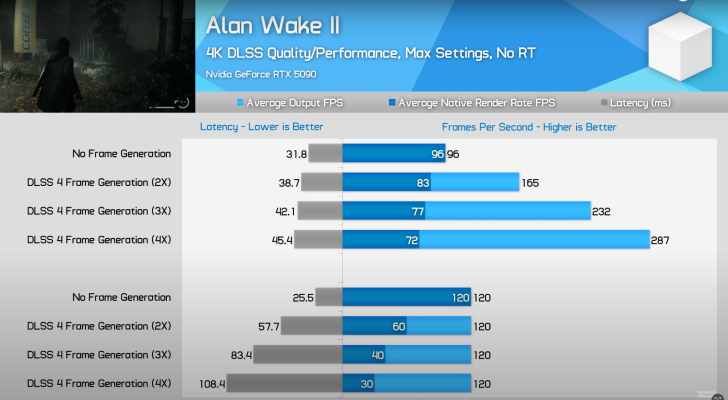

None of this excuses the fact that the numbers shown at CES are heavily gamed to fit the narrative that marketing chose to go with. A like-for-like comparison would have been better, but billion dollar companies aren't paragons of honesty to begin with.