"Given there's no real discernible difference between both generations with regard to SSD performance, using a PCIe 3.0-capable processor with a PCIe 4.0 SSD should be the same as using a PCIe 4.0 processor with a PCIe 4.0 SSD. Since PCIe 4.0 is backwards compatible, it would run fine."

Yes. It will be limited to Gen 3 speed, that's it.
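To put "limited to Gen 3 speed" into rough numbers, here is a small sketch of the theoretical one-direction bandwidth of an x4 NVMe link. It assumes the standard per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b encoding and ignores protocol overhead, so real drives land somewhat lower.

```python
# Rough theoretical PCIe link bandwidth in one direction.
# Assumes 128b/130b line encoding (used by PCIe 3.0 and 4.0) and ignores
# packet and protocol overhead, so real drives top out somewhat lower.

TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}  # giga-transfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128 payload bits per 130 transmitted bits


def link_bandwidth_gb_s(gen: str, lanes: int = 4) -> float:
    """Approximate usable bandwidth in GB/s for a PCIe link of `lanes` lanes."""
    bits_per_second = TRANSFER_RATE_GT_S[gen] * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9  # bits -> bytes -> GB


if __name__ == "__main__":
    for gen in ("3.0", "4.0"):
        print(f"PCIe {gen} x4: ~{link_bandwidth_gb_s(gen):.2f} GB/s")
```

Under these assumptions a Gen 4 drive in a Gen 3 x4 slot is capped at roughly 3.9 GB/s of sequential throughput, about half of what a Gen 4 link allows. Random I/O at typical queue depths usually moves far less data than either ceiling, which is why it barely changes.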

Ryzen 5000 APUs launched in India.

- Thread starter Sumit74


- Status

- Not open for further replies.

Stronk

Herald

And even that would likely only affect sequential speed; random speed shouldn't take that big of a hit compared to Gen 3, if at all.

Party Monger

Juggernaut

"Have you ever noticed any difference between PCIe 3.0 and PCIe 4.0? I'm running my GPU on PCIe 4.0 and have tested it in 3.0 and not seen any real difference."

It's just one of those good-to-have things.

eTernity2021

Forerunner

"I can illustrate with screenshots from my system but it's already going off topic."

Please post screenshots; it's not going off topic, because it really helps others and it will be an eye-opener. I will be satisfied if a CPU with 4 cores or fewer, together with its associated GPU, takes only 10% for a 4K video.

Even the Amlogic S908X can decode 8K 60fps. Usually the 8K format is AV1, and minimum hardware support starts from the Nvidia 3000 series or AMD RDNA2.

As for the RAM, even 1 GB will do.

"Now I wonder why I ask such silly questions sometimes."

Please forgive me, I also have a silly question for you. You recently opened a thread to sell your Samsung 980 NVMe SSD. WHY ARE YOU SELLING IT? What's the real reason? Over the internet, the Samsung 980 does not have good reviews, as opposed to its Pro counterpart, the Samsung 980 PRO. Did you feel the Samsung 980 is not worth it?

Stronk

Herald

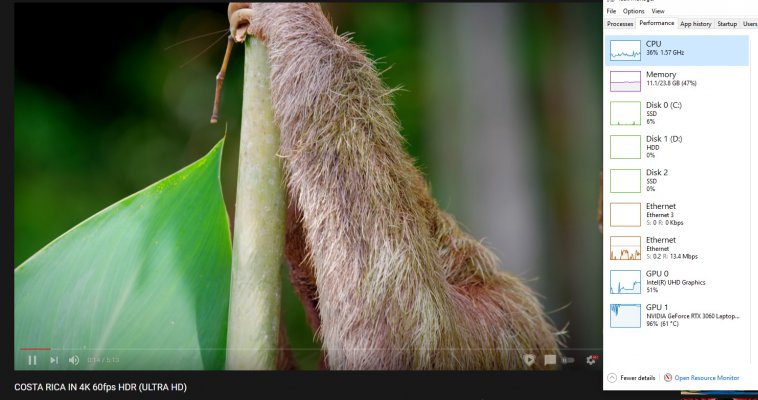

I have attached a screenshot. I'm currently doing some work with a few browsers and applications open in the background, but you can see that as soon as I play a 4K60 video, it is handled entirely by the iGPU. The CPU spiked to about 50% for half a second as I pressed the play button, but after that initial spike I saw no difference in CPU usage, only in iGPU usage. Going forward, and even today, hardware acceleration for video is becoming more and more prevalent, and that will take a significant load off the CPU, at least for playback of ultra-high-resolution content such as 8K and beyond.

Forgot to mention: the CPU is an 8-core/16-thread Intel i7-10870H with a lowly UHD 630.


eTernity2021

Forerunner

Is that a laptop? Then the player downscales the 4K video to HD, and it is not an eligible case for consideration. Moreover, I see discrepancies: the 3060 is hitting 90+%, the iGPU is at 50% and the processor is at 36%, which is kind of odd.

The right case would be this: the CPU you claim must have 4 cores, a speed of less than 5 GHz, and hardware acceleration in the iGPU or a dedicated GPU (like a 1070 to 3070). Then post multiple pics with multiple cores as well.

Stronk

Herald

No, it doesn't downscale; it's playing in full quality. See the attachment here: you can see in stats for nerds that it's playing at native resolution.

Yes, that's because, as I mentioned, I am also using my laptop for other work at the moment. But look at the delta before and after the video has started (the point where iGPU usage jumps); it's practically none.

Agreed, but I just wanted to demonstrate that 4K60 doesn't use the CPU even today, provided you have hardware acceleration. Admittedly, the iGPU is dropping about 15% of the frames, but I'm sure my 3060 can make light work of it. And if you're concerned about the clock speed, I think that should be fine: I have disabled turbo and increased the Speed Shift value (I'm rendering on the 3060 in the background and don't really need CPU power for now, and it helps lower temps), so with the current settings it always stays under 2 GHz. So technically, in its current state, it has less power than a 7700K locked at 5 GHz.

Again, this is not a scientific test by any means; it's just to demonstrate that hardware acceleration is a thing and it works. I would have loved to show you how the same video plays if I switch off hardware acceleration in the browser settings, or use the Nvidia GPU, but that will have to wait for later as I'm currently using my laptop lol

Hopefully this shows how hardware acceleration is poised to help playback of very high-resolution video.

So basically, if you are using an (integrated) GPU for hardware acceleration, the CPU should not matter. I'm pretty sure a Core 2 Duo can play back 8K video, provided it has a powerful enough GPU.
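The "dropping about 15% of the frames" figure is just dropped frames divided by total frames, taken from a counter such as YouTube's stats for nerds. A minimal sketch of that arithmetic, plus the effective frame rate it implies for a 60 fps source; the function names are hypothetical, and it assumes drops are spread evenly over time.

```python
def dropped_frame_percent(dropped: int, total: int) -> float:
    """Share of frames dropped, in percent, from a player's frame counters."""
    if total <= 0:
        raise ValueError("total frame count must be positive")
    if not 0 <= dropped <= total:
        raise ValueError("dropped must be between 0 and total")
    return 100.0 * dropped / total


def effective_fps(source_fps: float, drop_percent: float) -> float:
    """Frames per second actually shown, assuming drops are spread evenly."""
    return source_fps * (1.0 - drop_percent / 100.0)


if __name__ == "__main__":
    pct = dropped_frame_percent(150, 1000)  # hypothetical counter values
    print(f"{pct:.1f}% dropped -> ~{effective_fps(60, pct):.0f} fps shown")
```

By the same arithmetic, the 55% drop rate reported later for the 4K TV run works out to roughly 27 fps shown out of 60.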


eTernity2021

Forerunner

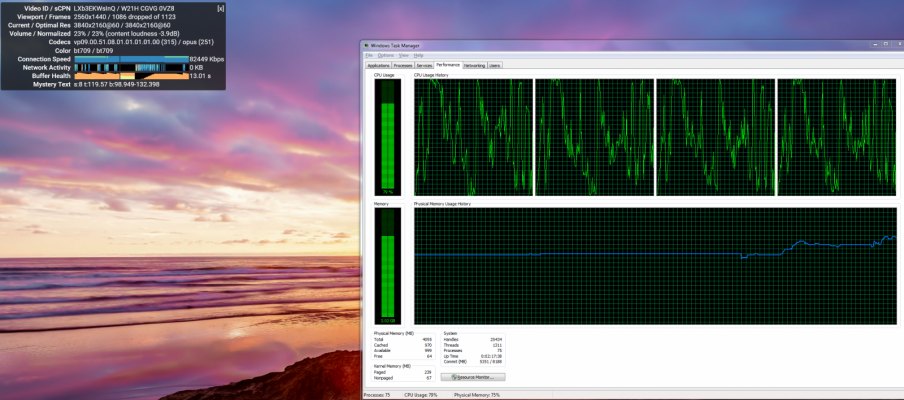

LOL come on bro, the viewport is not even HD; it is 720p in the stats for nerds, so it's not the right system to demonstrate 4K. I have an 11-year-old Core 2 Quad machine which can play 8K videos in HD, if the browsing speed is 150 Mbps.

But I appreciate your interest in helping others. I understand hardware acceleration is something, but I want a concrete processor. I am wondering from which generation it started: for Intel, say Kaby Lake, and for AMD, did it start with Zen (Zen 1) or Zen 3? I want such a screenshot.

I am sorry to say your laptop is not hitting native resolution for 4K, but that is the fact. Try it with a desktop and a 4K-capable monitor, and come up with real CPU usage values.

I have attached a 2K viewport with the C2Q.


Stronk

Herald

Yep, you were right. I retested it by connecting it to my 4K TV, and indeed more than 55% of the frames were now dropped. But the weird thing is, CPU usage was hardly at 10%, while the iGPU was still pegged at only 50%. I forgot to take screenshots, but I think this was because the CPU was still capped to 2 GHz as before.

This changed as soon as I let it run at default speeds. Once I let the CPU turbo to 4.1 GHz across all cores and played back the same video with a 4K viewport and 4K60 selected, it was much smoother. Although dropped frames were still at around 50%, it felt much smoother than before because YouTube didn't have to constantly buffer (the loading icon) every few frames, unlike before, when it did that even though the video had a healthy 20 seconds or so of buffer.

But there was a huge price for this small gain in smoothness. Although iGPU usage stayed at only 50% (which is strange, as the viewport was now true 4K instead of the 720p one before), CPU usage was now at 45-55%. So 45-55% of an 8-core/16-thread 4.1 GHz processor was being used, even with the iGPU's hardware acceleration, to play back compressed 4K60 video. I forgot to take screenshots or try GPU acceleration with my 3060, but yes, you do seem to be correct in saying that 4K60 playback will require a beefy CPU and will likely push a quad-core CPU to its limits.

Okay, I think I figured out why my iGPU was capped at just 50% despite clearly having the workload to push it beyond that: I had connected my TV to my laptop through the dGPU, but the hardware acceleration was being done on the iGPU. I think this may have been a factor in limiting the iGPU's usage, as the video was being rendered on the iGPU but displayed via the dGPU.

Oh well, I will try to do a better test from next time onwards. But your point about high CPU usage still stands.
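The "45-55% of an 8-core/16-thread processor" reading can be turned into a rough sense of scale. This sketch assumes the headline CPU percentage is averaged across all logical processors (roughly how Task Manager's overall figure behaves) and then naively projects the same work onto a 4-core/8-thread CPU. It deliberately ignores clock speed, IPC and SMT differences, so treat the result as a ballpark, not a benchmark; the function names are hypothetical.

```python
def busy_logical_processors(total_cpu_percent: float, logical_processors: int) -> float:
    """Turn an overall CPU-usage figure (averaged across all logical
    processors) into the equivalent count of fully busy logical processors."""
    return total_cpu_percent / 100.0 * logical_processors


def naive_projection(busy: float, target_logical_processors: int) -> float:
    """Project the same amount of work onto another CPU, capped at 100%.
    Deliberately naive: ignores clock speed, IPC and SMT differences."""
    return min(100.0, 100.0 * busy / target_logical_processors)


if __name__ == "__main__":
    for pct in (45.0, 55.0):  # range reported above for the 8c/16t i7-10870H
        busy = busy_logical_processors(pct, 16)
        print(f"{pct:.0f}% of 16 threads ~ {busy:.1f} busy threads "
              f"-> ~{naive_projection(busy, 8):.0f}% of a 4c/8t CPU")
```

Even this crude model puts a 4c/8t part at or near saturation, which is consistent with the conclusion that 4K60 software-heavy playback would push a quad-core to its limits.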


eTernity2021

Forerunner

I'll wait until you or other bros post such a snapshot with a pure 4K setup.

Well, I'll just say the above convo went over my head. I'm getting 8-10 frames dropped here at 2K, with CPU usage averaging 24% and nothing really on the GPU. Pretty sure I'm doing this incorrectly lol


Edit: The GPU is definitely being utilized; it spikes to 100%. Shouldn't have trusted Task Manager. Average GPU usage is close to 40% and CPU is about 24%. No frame drops in this run, though. And without hardware acceleration, the CPU and GPU usage averages roughly swap places, with some frame drops this time.


Was going to buy this one, but ended up going for the 5600X; my friend lent me a GPU for a couple of months.

Synth-Pop

Herald


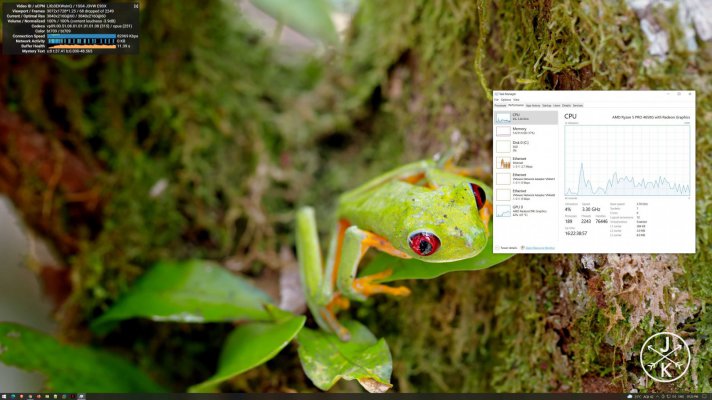

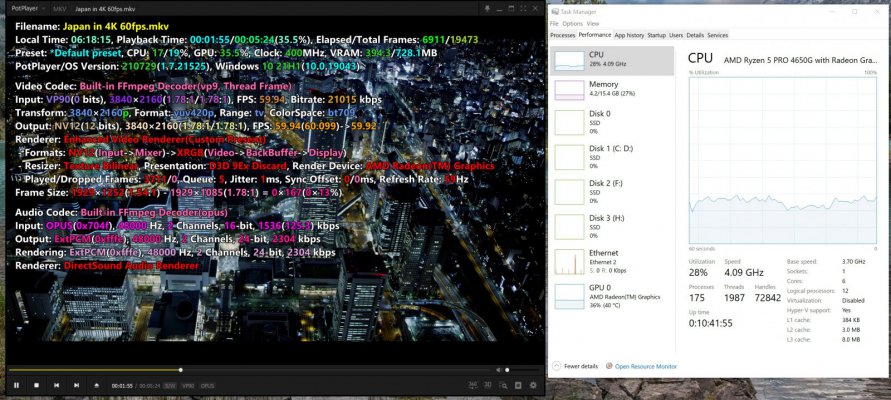

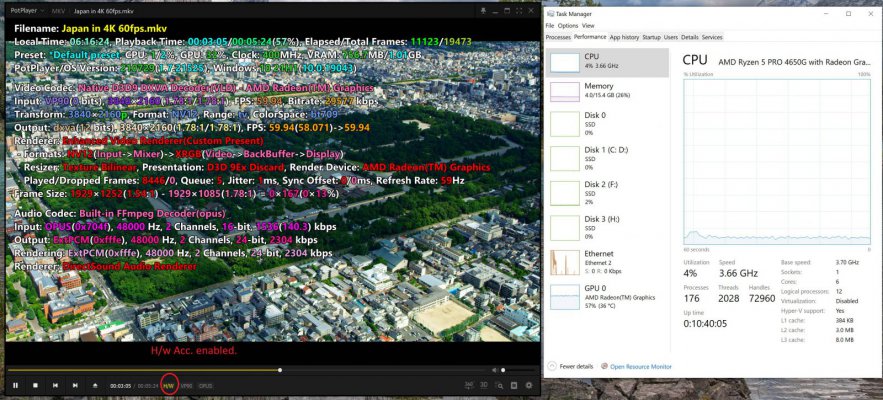

As requested, here are the pics:

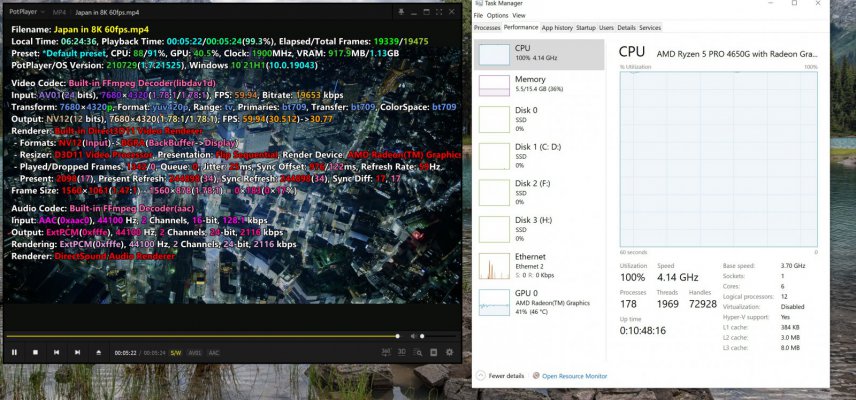

This video was downloaded from YouTube and is available in 4K, 8K and lower resolutions.

Scenario 1: 4K 60fps file with software decode.

Scenario 2: 4K 60fps with hardware acceleration enabled (same file as scenario 1).

Scenario 3: 8K 60fps (same video at a different resolution). Hardware acceleration isn't supported for it, so it's software decode only; it stutters mildly, but there are no frame drops.

As I mentioned in my previous post, this hardware acceleration is supported by RTX 3000 and above cards; not even the 2080 Ti can do it.

But surely a faster processor can soft-decode without stutter.

For all this testing, I was running the Ryzen 4650G only. I have already tried an R5 1600 + 1050 Ti and a 4650G + 1050 Ti too.

Same thing: either we need hardware acceleration support or a very fast processor.

@eTernity2021 I'm selling that Samsung 980 NVMe SSD because I've upgraded to a 2 TB SSD. I don't care what internet benchmarks say; this is a really good drive for the price.

Maybe it shows in benchmarks only, but I've not noticed any difference between this and the WD SN750 1TB, the Samsung 970 EVO Plus 2TB or the WD SN700 500GB. See the screenshot above: I'm running 4 NVMe SSDs.

The iGPU or external graphics card must support hardware acceleration for that format, or else playback shifts to software mode and stresses the CPU, regardless of how good your GPU is.

For instance, the RTX 2000 series can't decode the AV1 format that is usually used for 8K videos.
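The rule described above (no hardware AV1 decode means the CPU does the work, however strong the GPU is) can be written as a simple lookup. This is an illustrative sketch only: the table is coarse and family-level, limited to the GPUs named in this thread, and individual chips, drivers and browsers can differ, so check vendor documentation before relying on it. The family keys and function name are hypothetical.

```python
# Coarse, family-level AV1 hardware-decode table for GPUs mentioned in this
# thread. Illustrative only: specific chips, drivers and browsers can differ.
AV1_HW_DECODE = {
    "nvidia gtx 1000 (pascal)": False,
    "nvidia rtx 2000 (turing)": False,
    "nvidia rtx 3000 (ampere)": True,
    "amd vega igpu (ryzen 4000g)": False,
    "amd rdna2": True,
    "intel uhd 630": False,
    "intel xe igpu (tiger lake / rocket lake)": True,
}


def av1_decode_path(gpu_family: str) -> str:
    """Return where an AV1 stream would likely be decoded for this GPU family."""
    supported = AV1_HW_DECODE.get(gpu_family.lower())
    if supported is None:
        return "unknown"
    return "GPU (hardware)" if supported else "CPU (software)"


if __name__ == "__main__":
    for family in ("nvidia rtx 2000 (turing)", "nvidia rtx 3000 (ampere)"):
        print(f"{family}: {av1_decode_path(family)}")
```

A lookup like this only says whether the decode block exists; whether a given player actually uses it still depends on the software, as the Windows 7 vs Windows 10 result later in the thread shows.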


eTernity2021

Forerunner

First of all, thanks for posting such detailed snapshots, I appreciate it. The funny thing is, many are so careless about learning pure knowledge. Right from my queries about 4K, I have been discussing pure 4K, meaning:

1. You need to have a 4K monitor.

2. CPU: which one is good is yet to be determined.

3. GPU: iGPU or dGPU (as you have mentioned, only the 3000 series is good for playback of 4K videos; I still doubt it, or nobody knows yet, since 4K playback is a mess with the current set of GPUs).

4. Viewport: in your snapshot it is the frame size, and it is HD, not 4K. The original video may be 4K, but downscaling it to HD and presenting that as performance is absolutely the wrong way to test 4K. You are playing a 4K video in HD mode; your CPU usage represents only the downscaled processing, not pure 4K.

Having said that, now let's come back to your snapshots:

1. You have used PotPlayer. My question is: when I watch a 4K or 8K YouTube video in a browser with 150 Mbps of internet bandwidth, how would the CPU behave, and how do you bring the GPU into this context?

2. Use VLC Player and then a browser streaming from the internet, and then find out whether your CPU is worthy of playing 4K or is crying a bit.

3. Now, in the first 2 snapshots, the frame size is only HD, implying a downscaled 4K video. In the 1st snapshot, CPU usage is shared with the iGPU, at 28% and 36% respectively; PotPlayer is cheating your observation. It is only HD. And in the 2nd snapshot, again a 4K video downscaled to HD, the iGPU is showing 57%; for an HD show, that is too much, bro!!!!

4. Now coming to the 3rd snapshot: the frame size is 15xx x 10xx, downscaled to below HD, and it is an 8K video. The iGPU is at 40%, but your CPU is not fit for 8K video processing.

So you have not presented true 4K snapshots... I am still expecting someone to do a solid test of 4K. My claim is that I have not found even a single CPU and GPU combination that can play back 4K video in pure 4K format on a 4K monitor with 10% CPU usage.

Or if anybody has, please post it, and OPEN YOUR EYES GUYS, don't cheat yourselves with downscaled videos.

FYI @Nitendra Singh, PotPlayer can play 4K smoothly even on a 10-year-old CPU... but in the process, the CPU will be crying for HELP.

Synth-Pop

Herald

Mate, I am too busy to explain it further. Surely will discuss later.

Anyhow, I've now taken a screenshot as you desired, with a full 4K viewport, playing the same video on YouTube at 4K 60fps using the 4650G with the 1050 Ti.

You can see the CPU swings between 5-20% and the GPU between 20-40%; the GPU is working more because I've selected the "Use hardware acceleration when possible" option in my browser.

If I disable it, the CPU goes to 50-60% and the GPU comes down to 10%.

I'm using a 43" 4K TV as my display, with the desktop resolution set to 4K.

Now I think you have found a single CPU and GPU combination that can play back 4K video in pure 4K format on a 4K monitor with 10% CPU usage.
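Readings like "CPU swings between 5-20%" are easier to compare against the 10% target when they are summarized the same way for every run (hardware acceleration on vs off, Windows 7 vs Windows 10). A minimal sketch; the readings themselves would come from whatever monitoring tool you sample with, and judging by the mean rather than the peak is an assumption, chosen so that a short spike when pressing play doesn't dominate.

```python
from statistics import mean


def summarize_usage(samples: list[float]) -> dict[str, float]:
    """Min / max / mean of periodic CPU- or GPU-usage readings, in percent."""
    if not samples:
        raise ValueError("need at least one reading")
    return {"min": min(samples), "max": max(samples), "mean": mean(samples)}


def meets_cpu_target(cpu_samples: list[float], target_percent: float = 10.0) -> bool:
    """True if the average CPU usage stays at or below the target.
    Uses the mean, not the peak, so brief spikes don't decide the result."""
    return summarize_usage(cpu_samples)["mean"] <= target_percent


if __name__ == "__main__":
    placeholder = [5.0, 10.0, 15.0]  # placeholder readings, not measurements
    print(summarize_usage(placeholder), meets_cpu_target(placeholder))
```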

eTernity2021

Forerunner

Thanks. I took some time to check for myself what is really different at my end that causes 90% CPU usage with an Ivy Bridge i5-3570 quad-core processor, not overclocked. Now I have found an important difference: all this time I was doing the testing in Windows 7. I switched to Windows 10 with the right display drivers installed, where hardware acceleration in the browser was enabled by default, and to my surprise, CPU usage came down and swung between 9% and 30% (occasionally spiking to 48%). For a 9-year-old CPU, that is really great for me, and the GPU was hitting 51% handling the 4K playback.


For 8K playback, same story: it was at 88-100% and stuttering too. That implies my GPU and yours do not support 8K. On further research, I found that a new royalty-free codec is evolving, and its name is AV1. 8K is supported in AV1, so hardware acceleration for 8K is possible only with the Nvidia 3000 series, Intel Tiger Lake/Rocket Lake iGPUs, and AMD RDNA2.

Now, again, we are still at a stalemate. Despite my learning, my processor is 9 years old and yours is current-gen as of 2021, yet there is only a 10% difference in processing, which is a kind of dissatisfying improvement. After all, such an investment in a new CPU giving only a 10% improvement leaves a bad taste, IMHO. So I am fine with a 3rd-gen i5 over any new CPU, except for 8K video, which is not mainstream; if needed, I might go for Tiger Lake/Rocket Lake, which is kind of the distant future. So we are falling for marketing hype, because in both cases the GPU was taking the brunt of the 4K processing, as the hardware acceleration lives in the GPU. The CPU had only a small part to play.

So as far as 4K is concerned, a 3rd-gen i5 CPU with 4 physical cores is more than enough, but the OS must be Windows 10. The GPU is the hardware-acceleration champion, hence for 4K the Nvidia 1000 series is better. If one needs 8K, it requires a switch to an AV1-capable GPU for future-proofing; my prediction is it's going to take at least a couple of years... given the volatile GPU pricing happening all around the world.

So we are good: I found the CPU and the GPUs.

Synth-Pop

Herald

Exactly, this is what I was telling you: either a GPU with 8K AV1 support, or a CPU like maybe a 5800X or above for software processing.

And this frame viewport is nothing but the size of your media player window. If you play full screen at 4K resolution, it will match the original 4K resolution of the file.

We can't play at full 4K resolution in a small media player window, as the resolution of the media player window itself is less than actual 4K.

So we need to push it to full screen.
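The viewport point can be checked mechanically: compare the frame/viewport size a player reports with the video's own resolution. A minimal sketch with a hypothetical `Resolution` type; it assumes the viewport keeps the video's aspect ratio, and it only describes how much of the picture reaches the screen, not how much decoding work the CPU or GPU did.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resolution:
    width: int
    height: int


UHD_4K = Resolution(3840, 2160)


def is_native(video: Resolution, viewport: Resolution) -> bool:
    """True when the viewport is at least as large as the video on both axes,
    i.e. the player does not have to downscale."""
    return viewport.width >= video.width and viewport.height >= video.height


def displayed_pixel_fraction(video: Resolution, viewport: Resolution) -> float:
    """Approximate share of the video's pixels that reach the screen.
    Assumes the viewport has the same aspect ratio as the video."""
    ratio = (viewport.width * viewport.height) / (video.width * video.height)
    return min(1.0, ratio)


if __name__ == "__main__":
    for viewport in (Resolution(1280, 720), Resolution(3840, 2160)):
        print(viewport, is_native(UHD_4K, viewport),
              f"{displayed_pixel_fraction(UHD_4K, viewport):.0%}")
```

A 720p viewport on a 4K file shows about one ninth of the source pixels, which is why a full-screen window on a 4K display is needed before the viewport matches the file.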
