> It's 44 degrees here in UP and there is no AC in my room. Temps are bad in general. (If you have an AC in your room, I'm sure you would see better temps than mine.)
>
> I don't play those games, but I ran DS3 at a locked 60fps with no issues. Temps were around 82-84 degrees. Even my GPU is running hot; it's usually well below 70 and sits around 65 degrees in normal weather (around 30-35 degrees ambient). The fan profile seems similar to the ones you find on a laptop. It just runs hot by design. Vcore looks to be 1.331V, I hope, and not 1.550V!
>
> Just a quick update for those who buy this desktop kit and want to game with it. It's better to upgrade from C04, as the Windows power plans don't work and the CPU runs much hotter, even under ST loads.
>
> Upgraded to C06 then C07. Seeing a good decrease in both GPU and CPU temps.
>
> Another update with the C09 BIOS. Huge differences in temps compared to C04.

Excuse me, I have a question for you: how did you update the BIOS? From Windows by opening the flash.bat file, or from a pendrive? What would the procedure be like?

The_King

Explorer

> Excuse me, I have a question for you: how did you update the BIOS? From Windows by opening the flash.bat file, or from a pendrive? What would the procedure be like?

I did my updates from Windows using the batch file. I only used officially released BIOS versions for all my updates.

The update order I used from the stock BIOS version C04 was C06 > C07 > C08 > C09.

I'm still on C09 and have not updated to C0A.

Some members here have updated directly from C04 to C0A; that will be the fastest method.

> Would an Nvidia 1660 work with this board?

Yes, it will.

rsaeon

Innovator

So I've finally got a few of these to play around with. I have a few long-term use-cases in mind but for now I just want to explore the hardware since a platform like this is extremely rare. The last time we had a quirky platform for computing was with AMD's AM1 in 2014 and there has been nothing in between then and this 4700S Desktop Kit. (maybe 2016's Athlon X4 835/845 would count, they were mobile processors in a desktop package)

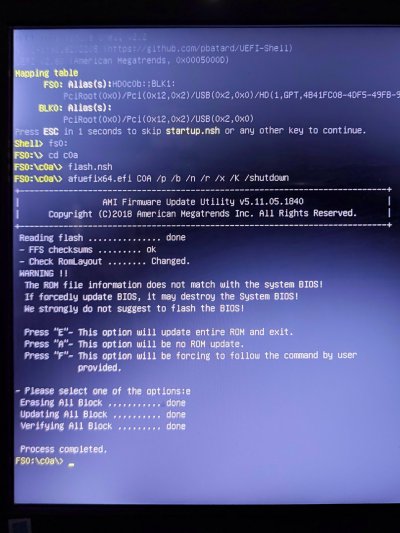

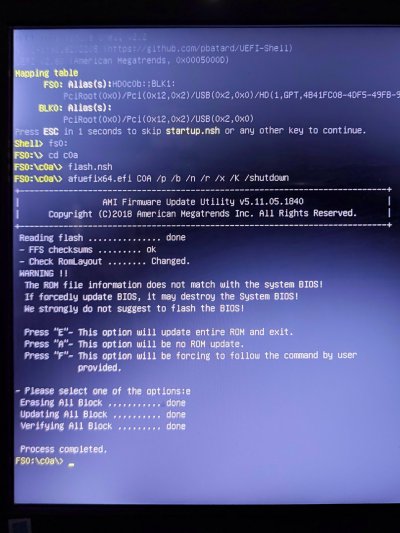

One of the boards had the original C04 bios, so I flashed a UEFI shell using Rufus with a GPT partition map in the recommended ISO writing mode (not EFS) onto a USB2 drive.

I found the UEFI shell here: https://github.com/pbatard/UEFI-Shell, Rufus is at https://rufus.ie/en/ and the BIOS files at https://www.amd.com/en/support/desktop-kit/amd-4700s-desktop-kit/amd-4700s-desktop-kit

I copied over the BIOS folder C0A from the extracted zip and powered on the desktop kit with the USB2 drive plugged into a USB2 (black) port. Flashing directly from C04 to C0A was pretty straightforward:

But for this board, there was no auto shutdown as mentioned in the PDF that's in the BIOS zip or the command line switch in the photo above. I reflashed one of the other boards to C0A this way and that one did shut down after completion. The time from power-on to display output has increased by a second or two with the C0A BIOS compared to C04.

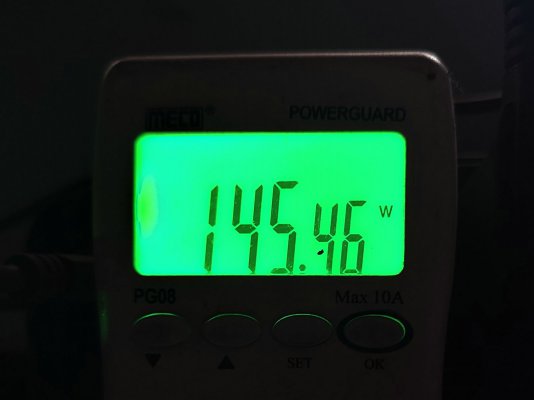

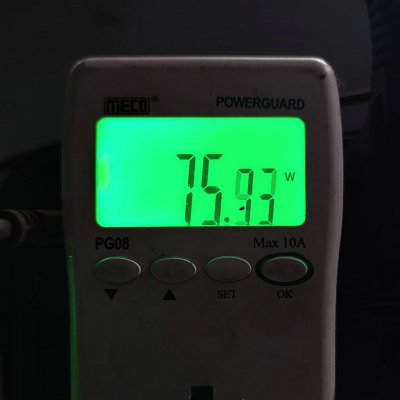

I took some preliminary power consumption readings with a fire hazard of an SMPS that I have for testing (it draws 8w with nothing plugged in). The system was under 100w at idle and peaked under 160w (145w average) while flashing. The readings were the same before and after the BIOS update. Those are pretty horrible numbers, but I think most of that is from the inefficient power supply.

That's it for now.


> Excuse me, I have a question for you: how did you update the BIOS? From Windows by opening the flash.bat file, or from a pendrive? What would the procedure be like?

I got 2 of these boards and I updated both of them to the latest C04 inside Windows! Worked fine.

The_King

Explorer

> I got 2 of these boards and I updated both of them to the latest C04 inside Windows! Worked fine.

I believe you mean C0A, not C04, which is the stock BIOS.

> I got 2 of these boards and I updated both of them to the latest C04 inside Windows! Worked fine.

Please share the C04 BIOS ROM file. Thank you.

rsaeon

Innovator

Changed the power supply to the popular Cooler Master MWE 450 V2. Idle power consumption dropped to ~86w in the BIOS and 75w with Proxmox (Debian) installed.

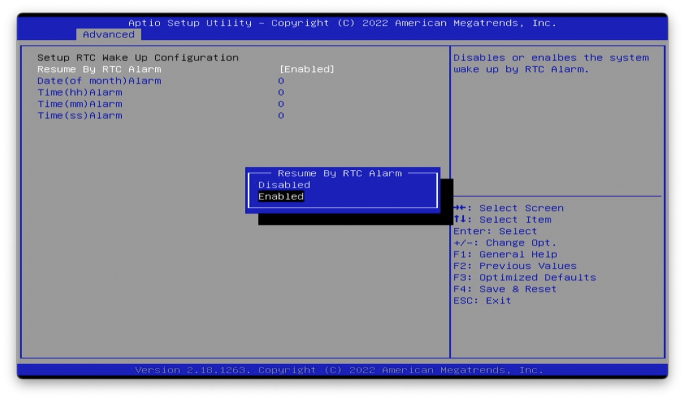

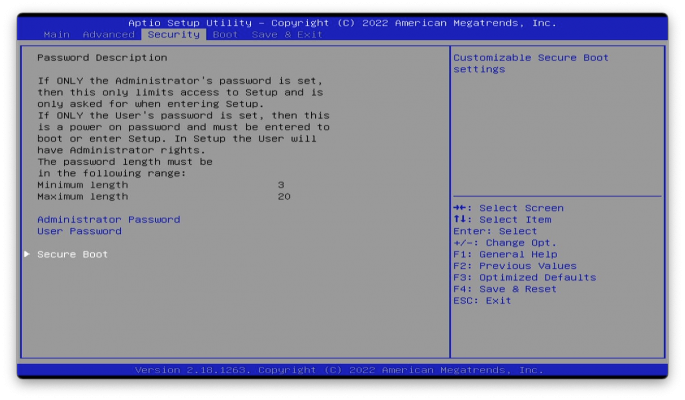

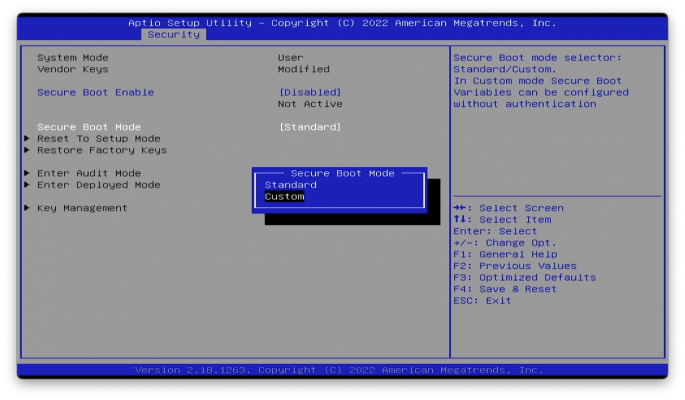

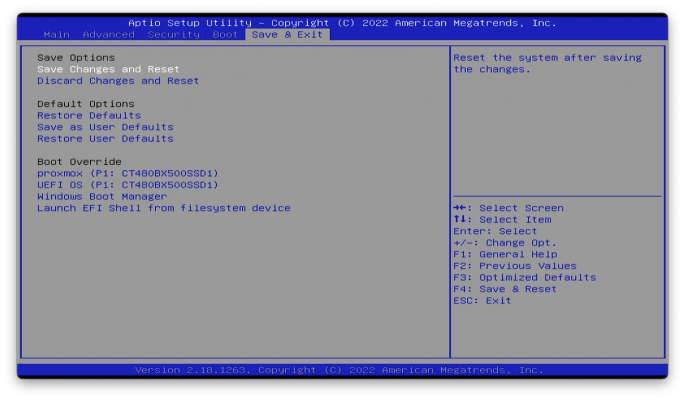

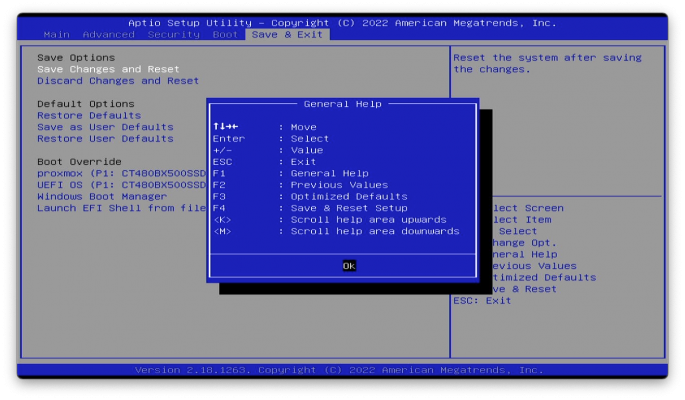

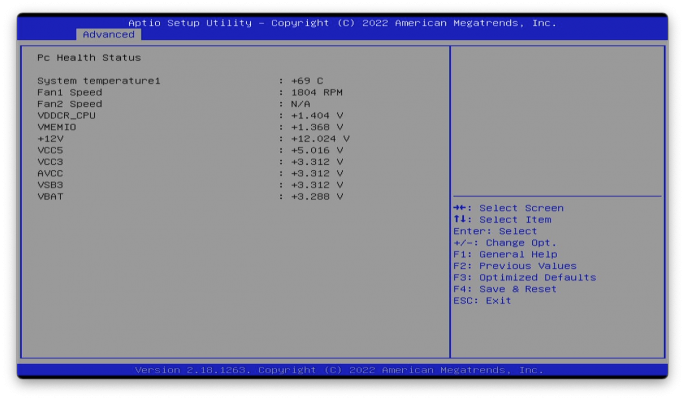

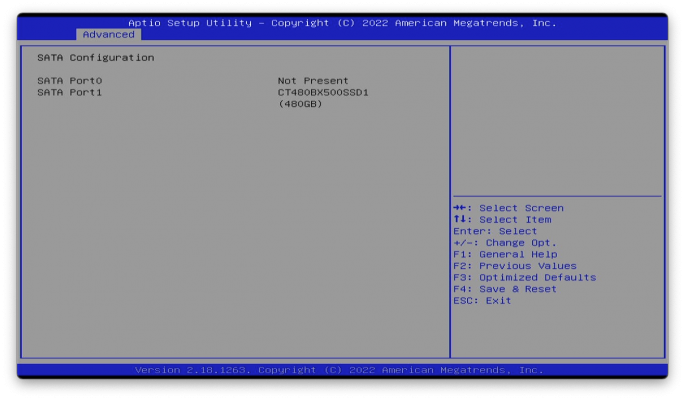

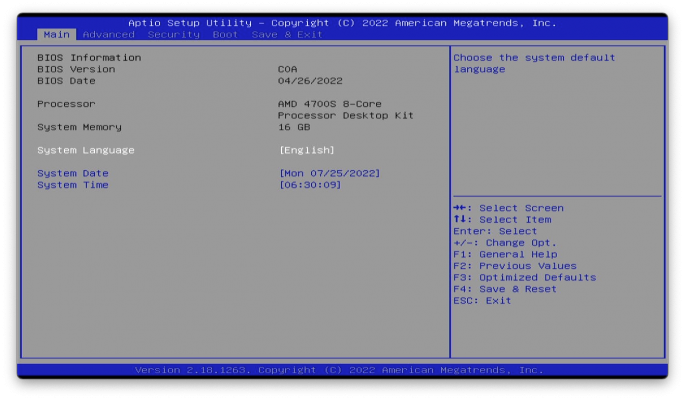

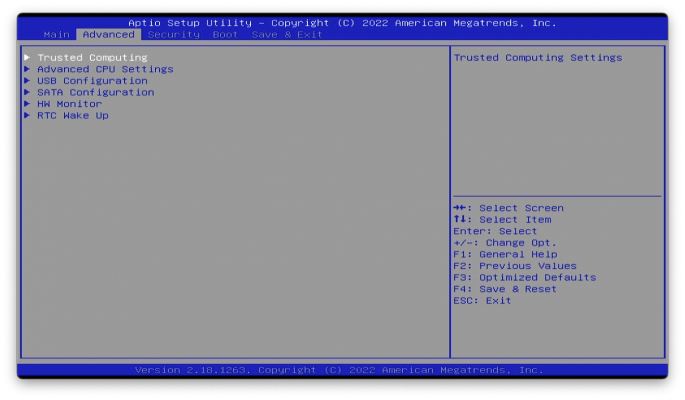

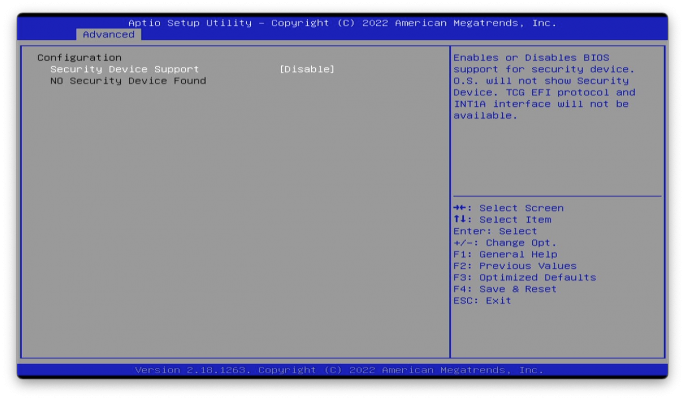

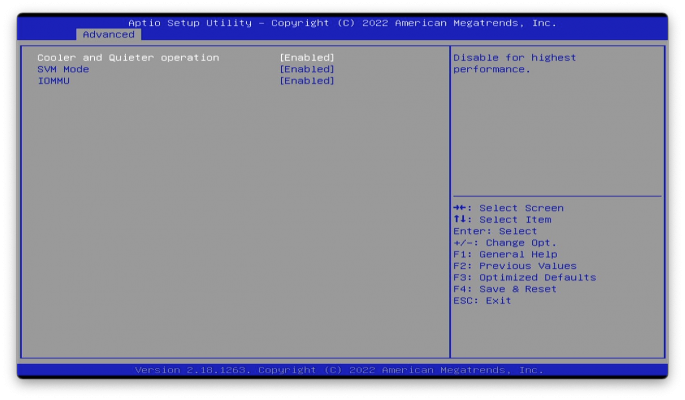

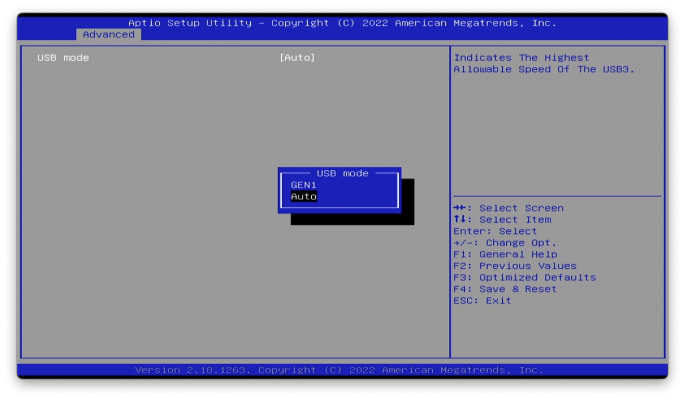

Picked up an HDMI capture dongle during Prime Day to take better screen captures. Attached are the BIOS screens as of version C0A.

Notably missing is a setting to power on after a power failure; the board stays off. This is not ideal for a virtualization host, since you'd want everything back online as soon as possible after a power failure. You could set the RTC timer to start it up, but I think this is a once-a-day thing, so it's possible the host will remain offline for up to 24 hours after a power failure. Maybe a timed pulse delay circuit of some sort would be helpful here, triggering the power button once 5VSB (the 5v standby signal from the power supply) is detected.
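To put a number on the "up to 24 hours" worry, here is a minimal Python sketch. It assumes (this is not confirmed by the BIOS screens) that the RTC wake-up is a single daily alarm at a fixed time and that the alarm still fires after mains power returns. `offline_duration` and its parameters are names made up for illustration.

```python
from datetime import datetime, timedelta


def offline_duration(power_restored: datetime, wake_hour: int, wake_minute: int = 0) -> timedelta:
    """Time the host would stay off after power returns, waiting for a daily RTC alarm.

    Assumption: the alarm fires once per day at wake_hour:wake_minute.
    """
    wake = power_restored.replace(hour=wake_hour, minute=wake_minute, second=0, microsecond=0)
    if wake <= power_restored:
        # Today's alarm has already passed, so the next chance is tomorrow.
        wake += timedelta(days=1)
    return wake - power_restored


# Power comes back one minute after a 06:00 alarm: almost a full day offline.
worst_case = offline_duration(datetime(2022, 7, 25, 6, 1), wake_hour=6)
# Power comes back half an hour before the alarm: a short wait.
best_case = offline_duration(datetime(2022, 7, 25, 5, 30), wake_hour=6)
```

Under those assumptions the wait depends entirely on when the outage ends relative to the alarm, which is why something that reacts to 5VSB directly looks more attractive.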

It's nice to see virtualization support as well as iommu support in the BIOS.


jdp861

Explorer

> Changed the power supply to the popular Cooler Master MWE 450 V2. Idle power consumption dropped to ~86w in the BIOS and 75w with Proxmox (Debian) installed.

The power consumption of this machine is very high. AMD's optimizations for it are way off. I wonder if there is a way to improve it.

I have been using this machine as my daily driver now. I have no issues with it except the high temperatures.

RC4A0FRIOS

Beginner

> The power consumption of this machine is very high. AMD's optimizations for it are way off. I wonder if there is a way to improve it.
>
> I have been using this machine as my daily driver now. I have no issues with it except the high temperatures.

I don't think that 75w of power consumption is bad at all.

The board is criticized for being noisy, and that is a totally wrong statement. Mine is sitting on my desk 30 cm from my face and it's dead silent. Unless you're running benchmarks, that is, but realistically, who on Earth will be using all 8 cores and 16 threads at 100% all the time?

Also, reviewers say that there is no possible upgradability, which is true, but c'mon: Intel changes motherboards every two generations and AM4 is in its final days. Most people upgrade their PCs by changing the HDDs, adding RAM and, if they start with a low end GPU, changing it.

I understand that this kit fails to impress in some countries where it's sold at a high price, but mine cost the same as a Pentium G4560 build, and you get a wonderful mid range PC with some high end features like the high core count and huge memory bandwidth, and some low end ones like the PCIe limitations, only two SATA ports, and lack of M.2 support.

jdp861

Explorer

> I don't think that 75w of power consumption is bad at all.

Ideally, I want the power consumption to be under 40w at idle.

Regarding noise: under normal conditions it is not noisy, but under load it can be a bit noisy. But who is complaining at this price point?

It is definitely a good PC at this price. And I am loving it.

There are a couple of things I noticed recently.

On Win10 the CPU temp never went above 95C, but yesterday I installed Win11 on it and the temp kept rising above 95C. I only let it rise to 98C, then switched the power mode from balanced to power saver to bring it down.

Also, a few times I faced another issue with Win11: after shutting the machine down, a mouse click would turn it back on.

I would also like to make a mount for my CPU cooler, but I don't have any experience with CAD design. I have a Gammax 400 lying around. Maybe some day I will give AutoCAD a try.

rsaeon

Innovator

Power consumption is something I'd like to explore more later on, the 75w number was with AMD Cool n Quiet turned on in the BIOS.

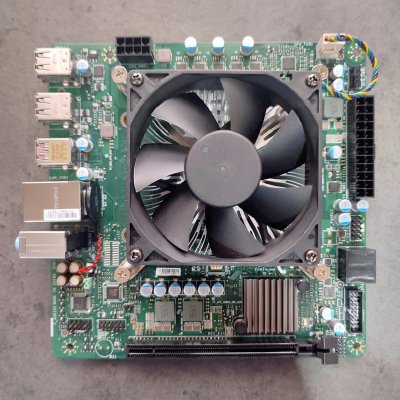

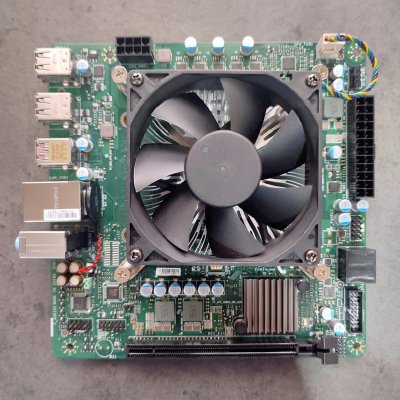

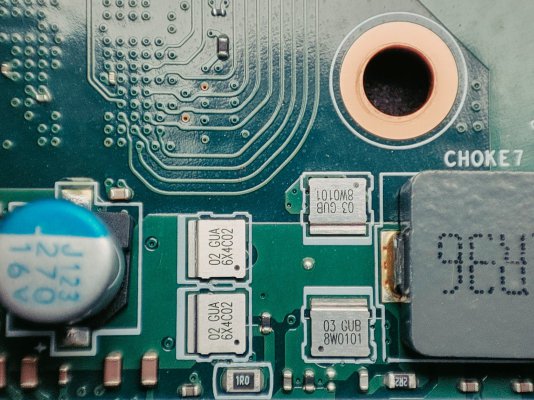

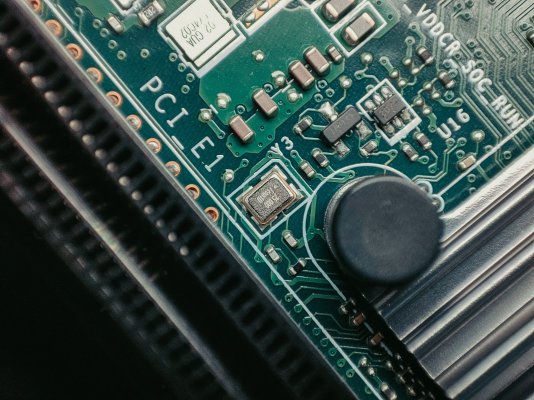

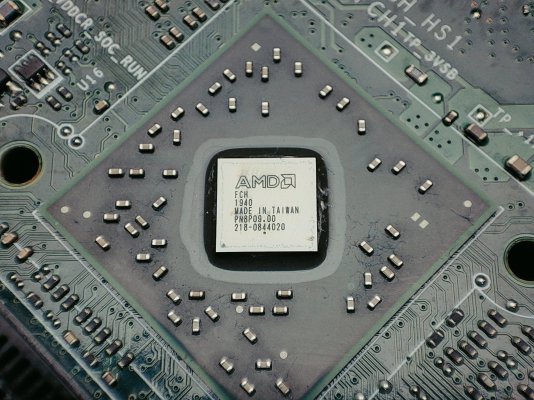

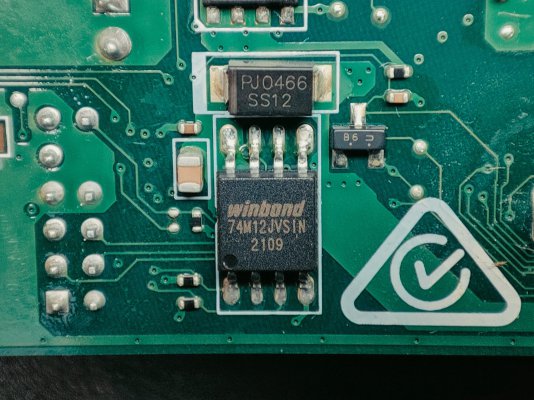

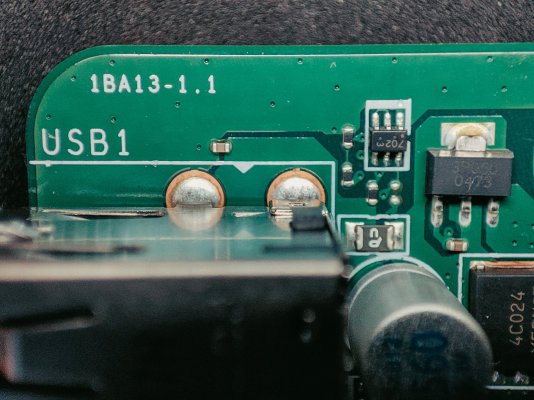

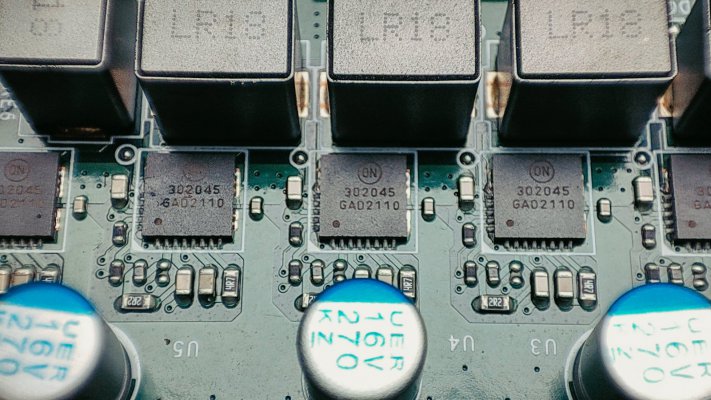

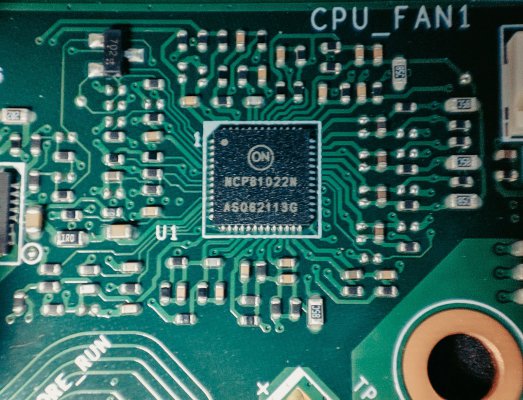

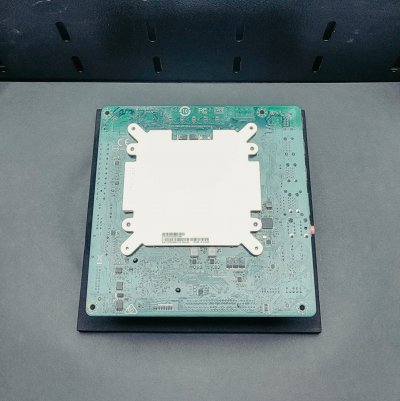

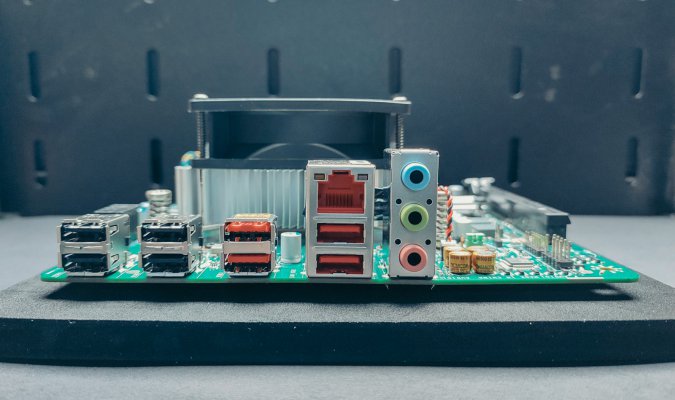

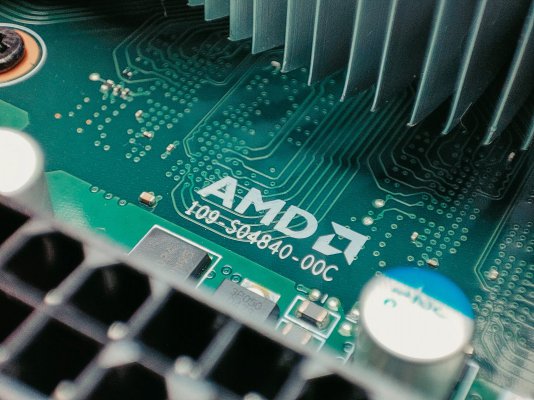

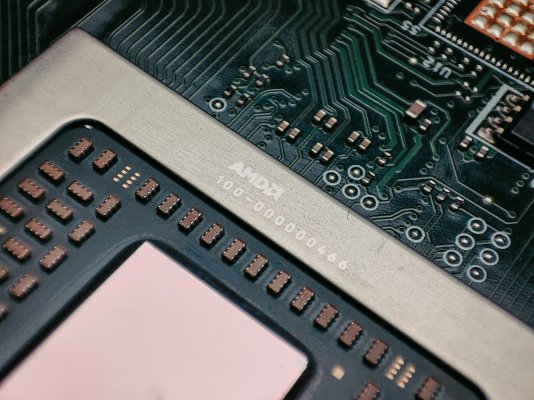

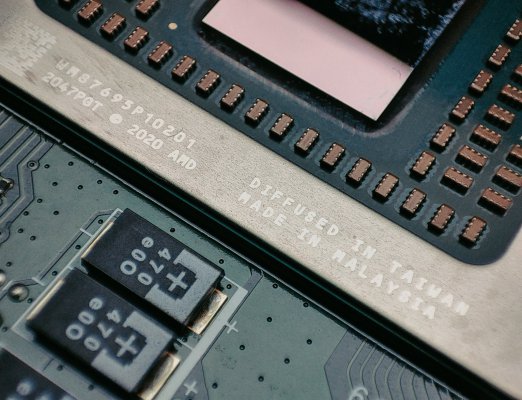

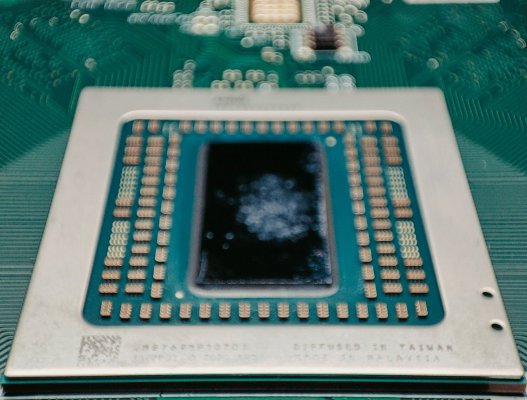

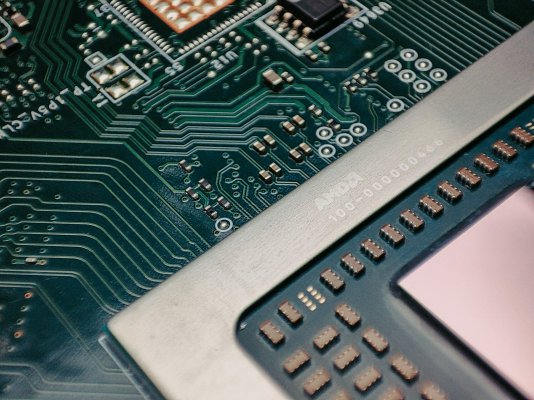

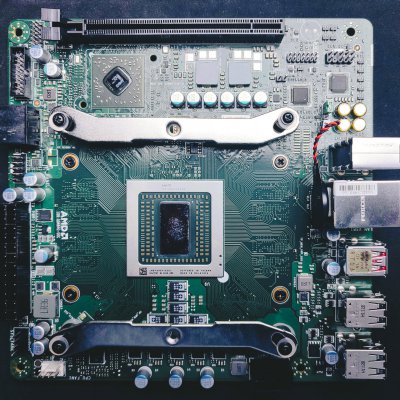

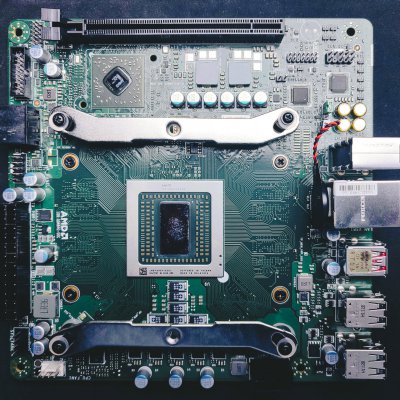

I've attached a photo essay of the hardware. Some notable chips on this board:

Power Delivery/VRMs: NCP81022N & ON 302045

Audio Codec: ALC897

Memory: SKhynix H56CBM24MIR S2C

Ethernet: ASIX AX88179 QF

BIOS: Winbond W25Q128JV (marked as 74M12JVSIN)

Other quirky stuff includes a solitary power-on red LED, a couple of 25MHz crystals, and some shiny components I couldn't immediately identify.

The board has two identifying numbers: 109-S04840-00C, which is probably a part number, and 1BA13-1.1, which is probably a revision code.

Unrelated, I've now become disenchanted with smartphone macro cameras because of the diffraction on the edges of the frame.


The basic premise of the board is very good indeed but there were a few more issues that I came across.

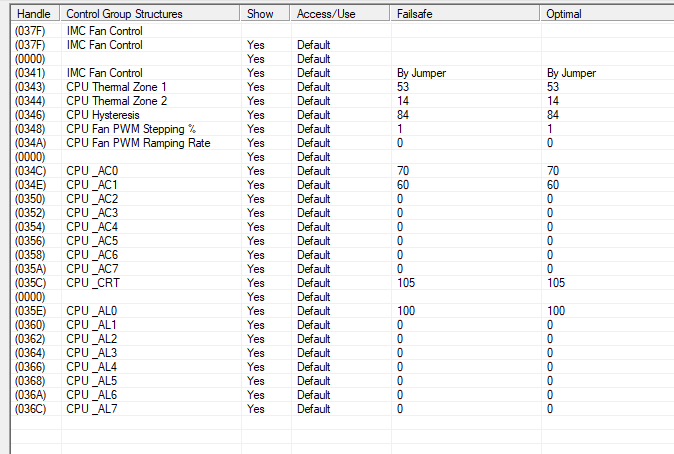

The fan control is basically non-existent, and what is there is horrible. The fans run at full speed if I use a non-PWM 3 pin fan. Beyond that, there is absolutely no way to adjust any fan setting: there is no option in the BIOS and none of the available fan control software works. Just now I revisited the BIOS and found more fan settings, which should be unlocked and accessible via the C0A modded BIOS. I should have tried these when I still had the board.

It gets so bad that the CPU fan just wants to run at 1000RPM at idle, even if I use a 120mm fan. The other fan header always runs fans close to the minimum speed (tested with the Arctic P12). With a 120mm CPU fan, the hot air just keeps swirling inside all the time, and it gets very difficult to exhaust anything because the other fan control just doesn't work.

This got so bad that I almost completed a custom loop to fix these heat issues. Now I've come to the realisation that to get this setup actually running quiet, the bare minimum is a 120mm AIO with liquid metal.

My board had the AMD USB bug on its USB 2.0 ports, where my custom keyboard based on a VUSB implementation kept restarting every 10 seconds.


> Ethernet: ASIX AX88179 QF

The Ethernet actually runs off one of the USB ports and not directly through the CPU.

> I would also like to make a mount for my CPU cooler, but I don't have any experience with CAD design. I have a Gammax 400 lying around. Maybe some day I will give AutoCAD a try.

I have shared a template for a 115x to 4700S adapter if you revisit my posts in this thread. Go to a laser cutter and get it done.

rsaeon

Innovator

> The Ethernet actually runs off one of the USB ports and not directly through the CPU.

This is of concern to me. The younger generation of tinkerers have no hesitations about relying on USB or wifi for critical applications (there are so many people using the Raspberry Pi Zero W for Pi-hole on wifi, ack), but for those of us who lived through decades of USB issues like frequent disconnects and buggy drivers, it's simply not an option.

I'm intending to use this in a Proxmox cluster that requires at least three Ethernet connections (corosync, migration, VM data), so ideally an expansion card with a PLX chip would be best. But those cost hundreds of dollars: the cheapest are the 4x M.2 NVMe cards with a PLX chip, available for under $200, with more functional ones for twice that much from https://c-payne.com/collections/pcie-packet-switch-plx-pcbs

Apart from the price, they're also high bandwidth, which isn't necessary here. Gigabit Ethernet has a theoretical throughput limit of 1000/8 = 125MB/s. A single PCIe 1.0 lane is 2Gb/s or 250MB/s, so a PCIe 2.0 x1 link is twice that: 4Gb/s or 500MB/s. That's plenty of bandwidth for 3 or 4 gigabit connections.
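The arithmetic above can be checked with a short Python sketch. The 2.5 GT/s and 5 GT/s signalling rates and the 8b/10b line encoding are background facts about PCIe 1.x/2.0 that are not stated in the post; the function name is made up for illustration, and the numbers ignore packet/protocol overhead, so real throughput will be lower.

```python
def pcie_lane_mb_per_s(megatransfers_per_s: int) -> float:
    """Theoretical MB/s for one PCIe 1.x/2.0 lane (ignores protocol overhead)."""
    usable_mbit_per_s = megatransfers_per_s * 8 / 10  # 8b/10b: 8 data bits per 10 line bits
    return usable_mbit_per_s / 8                      # 8 bits per byte


GIGABIT_ETHERNET_MB_S = 1000 / 8           # 125 MB/s per gigabit port
PCIE1_X1_MB_S = pcie_lane_mb_per_s(2500)   # PCIe 1.0: 2.5 GT/s per lane
PCIE2_X1_MB_S = pcie_lane_mb_per_s(5000)   # PCIe 2.0: 5 GT/s per lane

# How many gigabit ports could run flat out over one PCIe 2.0 x1 uplink?
ports_at_line_rate = int(PCIE2_X1_MB_S // GIGABIT_ETHERNET_MB_S)

print(PCIE1_X1_MB_S, PCIE2_X1_MB_S, ports_at_line_rate)
```

Four ports at line rate would use the whole theoretical uplink, so three leaves some headroom, and a cluster rarely saturates every link at once.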

I could use a quad port network card, but those are not readily available, and they start at 4500 refurbished and go up to 10k. That's not exactly cost effective to replace, and I do require a solution that is modular enough to replace quickly and easily. Also, a quad port network card would take the place of the graphics card, and while this platform could run headless, I do also require video out for troubleshooting.

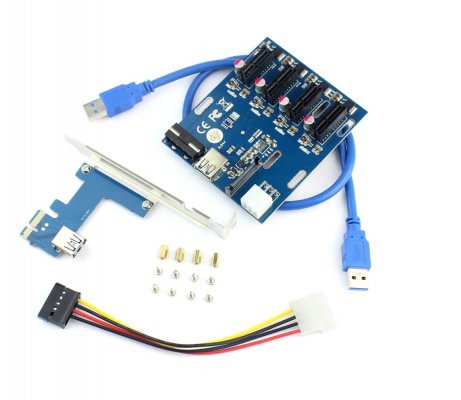

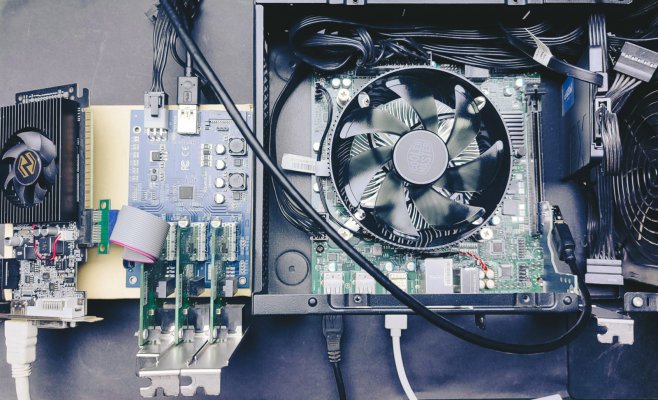

So I need at least three PCIe based Ethernet interfaces and a graphics card. In an unexpected twist, there is something uniquely suited for this. I guess I have the "mining bros" to thank for its existence:

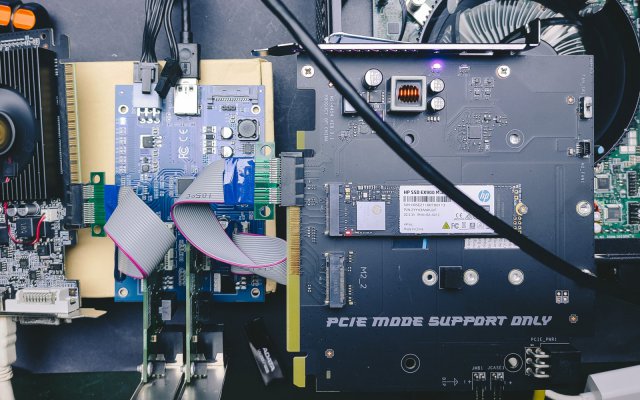

This is a daughter board that turns one PCIe 2.0 x1 slot into four x1 slots, based on the ASMedia 1184e PCIe 2.0 Packet Switch: https://www.asmedia.com.tw/product/556yQ9dSX7gP9Tuf/b7FyQBCxz2URbzg0 This chip first appeared on a motherboard way back in the Z97 era, so it is tried, tested and stable. It works just like a PLX chip, but with x1 lanes instead of x8, and just like the PLX solution, it's driverless. The primary use case is adding low bandwidth interfaces like wifi or gigabit Ethernet to motherboards.

An addition like this adds about 3k to the cost of the AMD 4700S system, bringing the total for CPU+Motherboard+Ram+This to 12k, which is still very much acceptable seeing that the Ryzen 7 2700 alone goes for 11k to 12k these days.

I have a few of these x1 to x1 extension cables from my 2013 mining adventure that I've kept around solely because of the physical effort expended to shave off the ends by hand since I had no power tools at the time:

And so with everything connected (1x graphics card, 3x ethernet adapters):

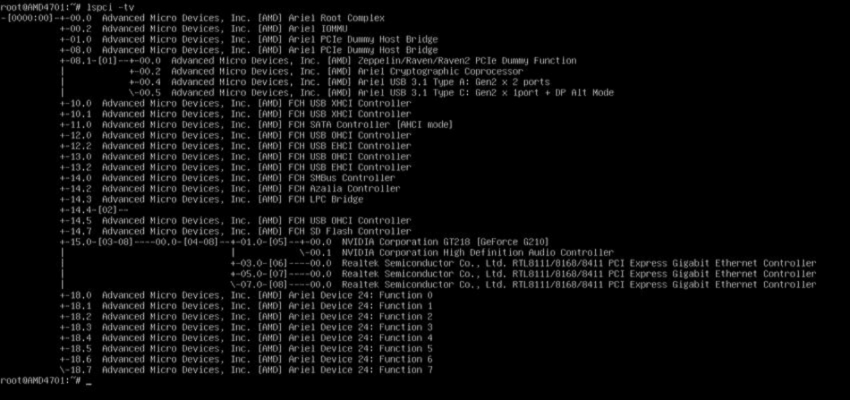

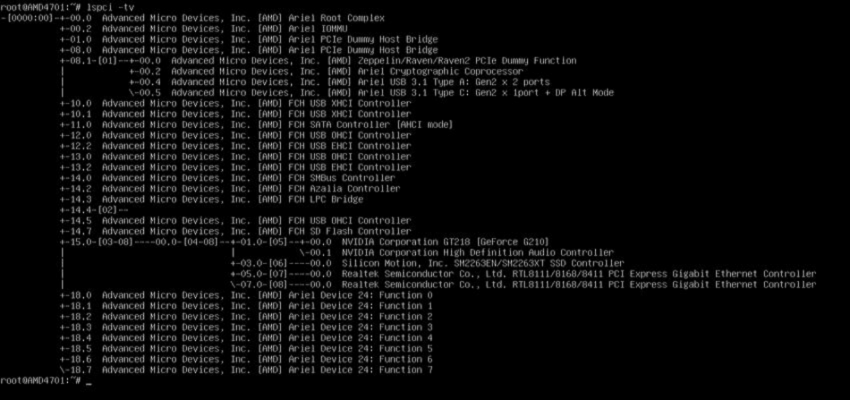

Sure enough, all devices are accounted for:

Now the next step was to see if nvme drives would work, not that I needed this, but I wanted to know:

And yes, it was detected as well:

However, there's no NVME boot support in the BIOS, so we'd need something like a bootloader on a sata drive or dom in order to boot off an NVME drive.

But then the 500MB/s limitations of the PCIe 2.0 packet switch would probably render an nvme drive impractical apart from not needing a SATA power cable for a storage device. The testing confirms this:

Just under 400MB/s, which I suspect is a limitation of the NVMe controller on the SSD operating at x1, rather than overhead from the PCIe packet switch card. SATA by comparison:

Just under 550MB/s is about the best we can expect, considering it is a DRAM-less drive (Crucial BX500).

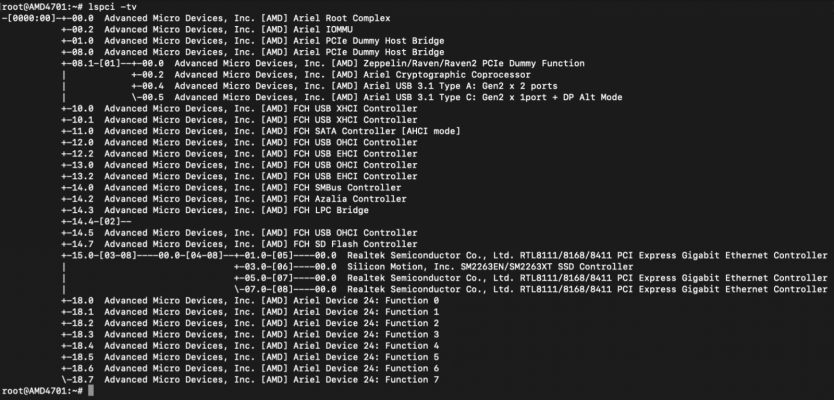

The next test was to confirm if it'll work headless, I removed the graphics card and powered it on with three ethernet adapters and the nvme drive and everything was detected as expected:

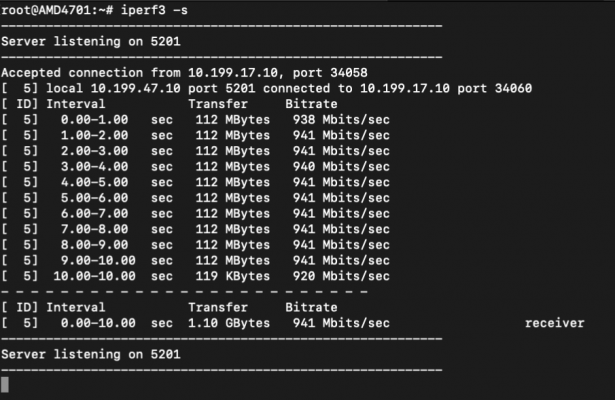

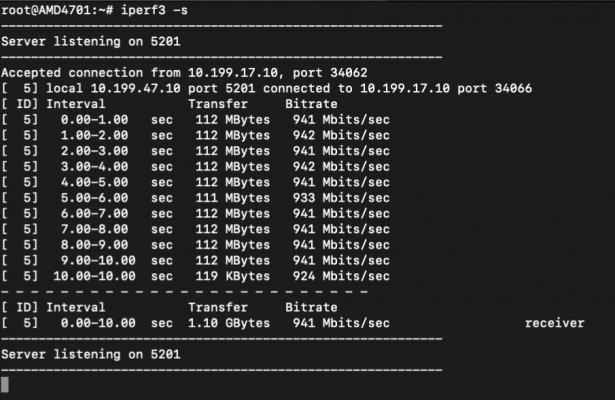

Last up was a simple iperf test to compare the bandwidth offered by the built-in ethernet interface and the realtek card in the PCIe packet switch board:

USB is on top, PCIe on bottom. Results are pretty much identical.
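For anyone wanting to repeat this comparison without reading screenshots, here is a rough Python sketch. It assumes iperf3 (the post only says "iperf", so the exact tool is a guess), a server already running `iperf3 -s` on another machine, and a TCP test. The helper names and the addresses in the commented usage are hypothetical.

```python
import json
import subprocess


def run_iperf3(server: str, bind_address: str, seconds: int = 10) -> dict:
    """Run an iperf3 TCP client bound to one local address and return its JSON report."""
    cmd = ["iperf3", "-c", server, "-B", bind_address, "-t", str(seconds), "-J"]
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(completed.stdout)


def received_mbps(report: dict) -> float:
    """Receiver-side throughput in Mbit/s from an `iperf3 -J` TCP report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def relative_difference(a_mbps: float, b_mbps: float) -> float:
    """Difference between two results as a fraction of the faster one."""
    return abs(a_mbps - b_mbps) / max(a_mbps, b_mbps)


# Hypothetical usage: bind to the address of each interface in turn.
# usb_nic = received_mbps(run_iperf3("192.168.1.10", "192.168.1.21"))
# pcie_nic = received_mbps(run_iperf3("192.168.1.10", "192.168.1.22"))
# print(usb_nic, pcie_nic, relative_difference(usb_nic, pcie_nic))
```

Binding with `-B` matters here because both interfaces sit on the same host; without it the kernel picks the route and both runs may end up measuring the same NIC.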

At this point I'm ready to add this to my cluster, get a few dozen VM's going and see how it handles over the next few weeks.


> This is a 1x slot PCIe 2.0 x1 to 4x slot daughter board, based on the ASMedia 1184e PCIe 2.0 Packet Switch: https://www.asmedia.com.tw/product/556yQ9dSX7gP9Tuf/b7FyQBCxz2URbzg0

Interesting. I did not even know that these PCIe multipliers existed (no, I have never mined). I wonder how they work if you add a GPU, which requires continuous dedicated bandwidth. Also, are these available today? I found this, but for some reason they say it cannot be attached directly to the motherboard.

rsaeon

Innovator

Yes, they are available — I got mine during the Prime Day sale last weekend. It's only the x1 extension cables that I had from before.

That Waveshare expansion board was the first one I considered because of price, but the documentation is unclear. Apparently the 5v line feeds back into the 12v line, so if you're going to power it through the floppy molex connector, it should be 12v only and not both 5v and 12v. The cryptic warning about not plugging it directly into a regular motherboard probably has to do with the orientation of the slots: you wouldn't be able to use them with the PCI slots of your cabinet.

I had seen these extender cards/boards months ago, but I wrote them off as questionable Chinese electronics, and they are to some extent. But after I found that the same chip, the ASMedia 1184e, was used by Asus in a few of their motherboards (including one that I own and use every day), I understood it had to be a refined/stable solution for adding more low bandwidth PCIe devices.


jdp861

Explorer

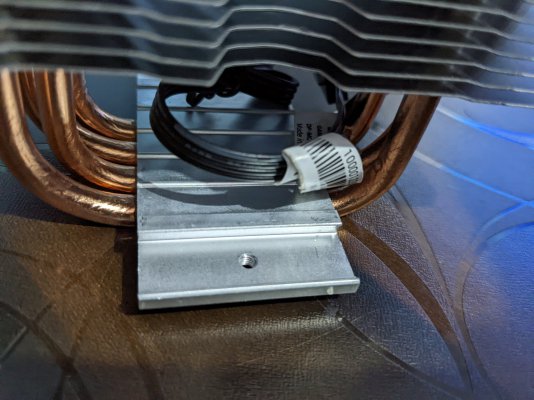

> I have shared a template for a 115x to 4700S adapter if you revisit my posts in this thread. Go to a laser cutter and get it done.

@SunnyBoi I got the adapter 3D printed but it doesn't solve my problem.

Here are the issues with this approach:

If I use the mounting mechanism provided with the cooler, then there is a gap between the CPU and the cooler.

I cannot control the gap because the mounting screws of the cooler are fixed.

I also cannot reduce the height of the mounting bracket from the motherboard, as it would cause the screws to touch the board.

What I need here is a bracket that is specifically designed for this cooler, so I can adjust the height of the cooler as required.


rsaeon

Innovator

Nice, that 3D printed bracket turned out a lot better than I thought it would! Is that 100% infill? Did you try stressing it?

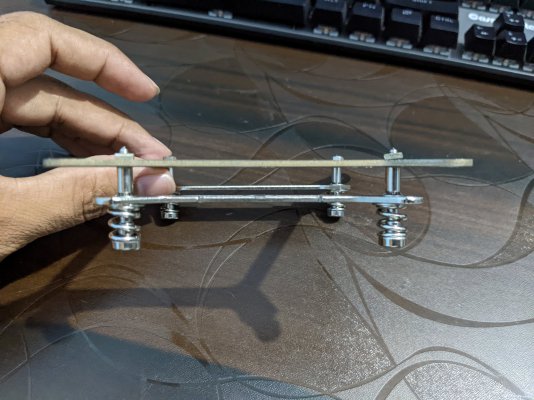

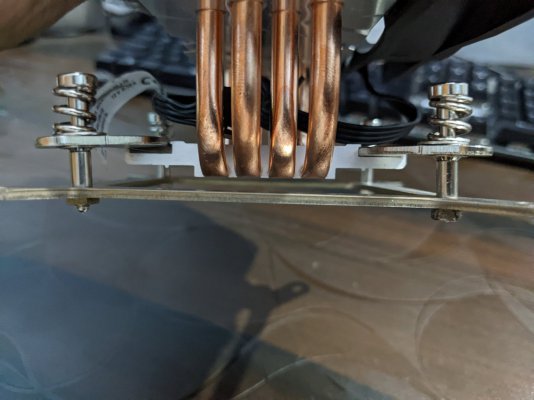

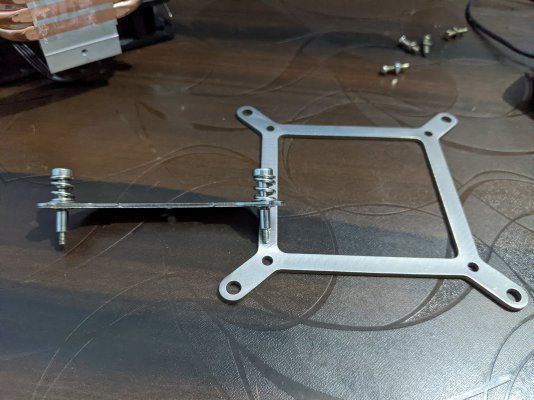

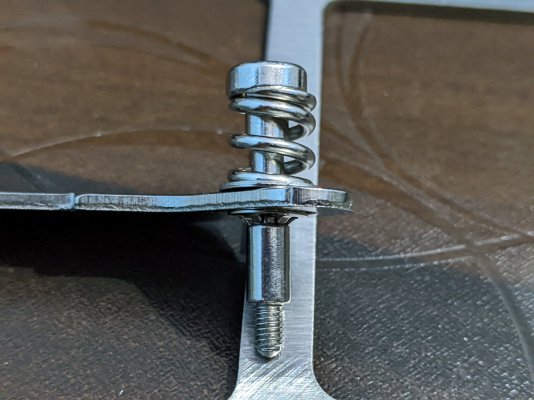

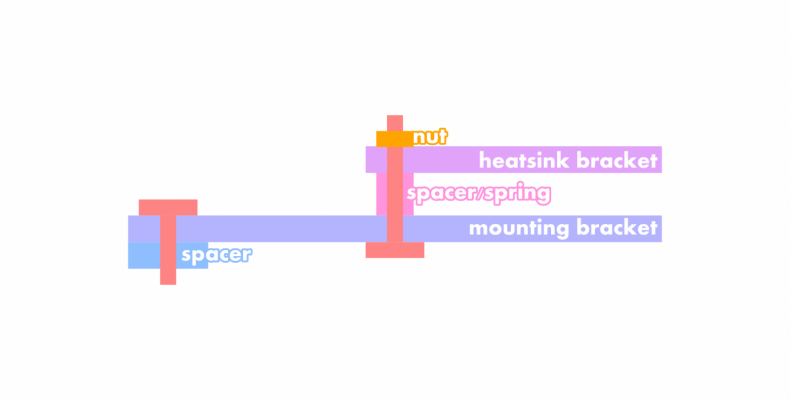

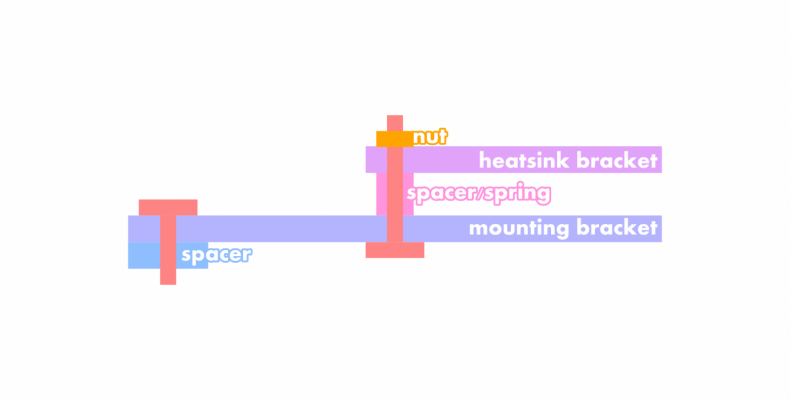

I wouldn't be using the original mounting screws/bolts/nuts. I'm planning on something like this:

If there's still space between the bottom of the cooler and the processor, it'll have to be filled in with a copper shim.

It may be easier to remove those screws or use a different cooler than to make a custom bracket specific to that cooler.

EDIT:

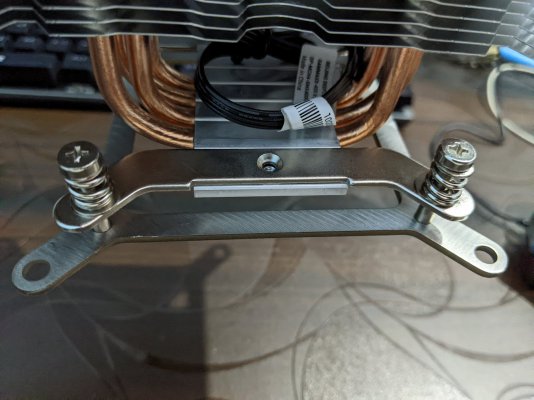

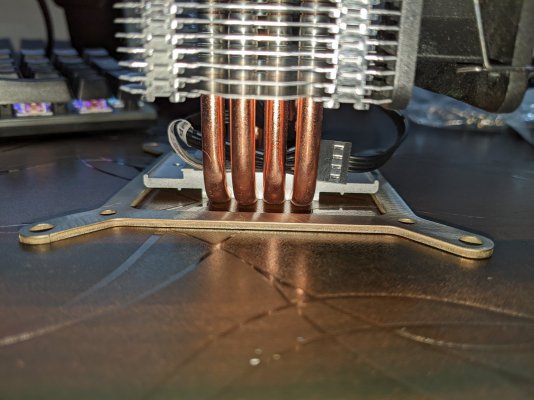

So I've discovered the mounting holes almost line up (within 1mm) with the AM3/AM4 bracket that comes with the Deepcool GAMMAX 400 cooler:

This opens up the possibility of using these brackets to hold down coolers that come with a square rotatable bracket, like Deepcool's AK400 (pictured here screwed into the black backplate):

Of course this means you'll need the mounting hardware for a cooler you wouldn't be using, so this solution is not universal — but that GAMMAX 400 can still be used with Intel processors so it's not a complete loss.

But then again, if you're able to get @SunnyBoi's custom bracket made/cut then the AK400 above would work nicely.


jdp861

Explorer

> Nice, that 3D printed bracket turned out a lot better than I thought it would! Is that 100% infill? Did you try stressing it?

The mount is pretty solid. I wouldn't worry about it. It fits perfectly. SunnyBoi did a very good job designing it.

> It may be easier to remove those screws or use a different cooler than to make a custom bracket specific to that cooler.

I don't think they can be removed unless I'm willing to break them.

> So I've discovered the mounting holes almost line up (within 1mm) with the AM3/AM4 bracket that comes with the Deepcool GAMMAX 400 cooler:

Yes, they do line up OK. I have also checked them.

> This opens up the possibility of using these brackets to hold down coolers that come with a square rotatable bracket, like Deepcool's AK400.
>
> But then again, if you're able to get @SunnyBoi's custom bracket made/cut then the AK400 above would work nicely.

We may be able to get it working by using the AM4 brackets from the GAMMAX and mounting the AK400 on them (if it fits), but I highly doubt it. I don't think the holes would line up.

For me, if I can get the Intel mounting bracket of the Gammax fabricated (to get it without the screws, as in pic 8 in the previous comment) and then use that with SunnyBoi's mounting bracket, that will do the job.