Nvidia 4000 series cards

- Thread starter kartikoli


- Tags

- gpu pricing


- Status

- Not open for further replies.

> der8auer has launched his video on the 4090 with some interesting facts: the power limit for the 4090 is stupid, and according to him, if you reduce the power limit to 50-60%, the performance drop is only 5 to 6 frames, which is typical of TSMC chips, while power draw drops by almost 120 to 150 watts.

Well well well:

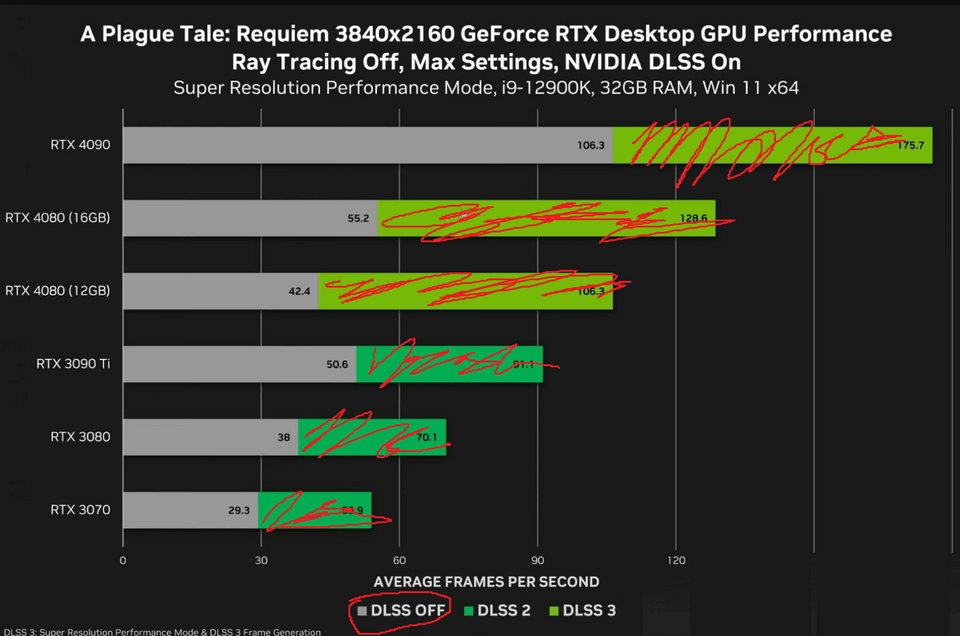

> I think we're at a really confusing point in time because the new Ryzen CPUs and Nvidia GPUs can be run at 50% power for >90% of the performance, but hey, nobody driving a Ferrari will go at a speed of 80 just to save fuel now, would they?
>
> Source for Ryzen was Optimum Tech's video and for Nvidia was rumors.

I'm disappointed in DLSS 3.0 tho. Just implementing MEMC tech and calling it "extra fps" isn't right in my book. (Notice how there's always a 50% boost in fps, since it takes 2 frames and interpolates one frame, so 2:1 => 50% extra frames.) IMO that would be more effective for older cards, but hey, Nvidia said let's just lock it to the newer cards, which already hit such high FPS numbers.

Also, the 4090 is a beast hands down, the raw rasterisation it offers is astonishing. But yeah it is definitely overkill and not having the option to run 4k 144hz uncompressed is a bummer.

cyborg007

Discoverer

> Material will be billed without GST invoice

Is this normal? Is this how it was when the 30 series was on sale?

> Also, the 4090 is a beast hands down, the raw rasterisation it offers is astonishing. But yeah it is definitely overkill and not having the option to run 4k 144hz uncompressed is a bummer.

Doesn't it do 4K 120Hz uncompressed? Would the extra 24Hz make any meaningful visual difference?

> Is this normal? Is this how it was when the 30 series was on sale?

The website has been showing it for some time. Mostly it has not been corrected. The 3000 series was invoiced with GST to customers.

burntwingzZz

Innovator

> Well well well:
>
> I'm disappointed in DLSS 3.0 tho. Just implementing MEMC tech and calling it "extra fps" isn't right in my book. (Notice how there's always a 50% boost in fps, since it takes 2 frames and interpolates one frame, so 2:1 => 50% extra frames.) IMO that would be more effective for older cards, but hey, Nvidia said let's just lock it to the newer cards, which already hit such high FPS numbers.
>
> Also, the 4090 is a beast hands down, the raw rasterisation it offers is astonishing. But yeah it is definitely overkill and not having the option to run 4k 144hz uncompressed is a bummer.

AMD insider leakers have kind of confirmed that AMD will most probably match the 4090's rasterization, or maybe even surpass it, at lower power consumption and at a lower price point. I hope it turns out to be true.

> AMD insider leakers have kind of confirmed that AMD will most probably match the 4090's rasterization, or maybe even surpass it, at lower power consumption and at a lower price point. I hope it turns out to be true.

AMD GPU leaks used to be pretty unreliable. I remember the Vega hype they created and then bombed. Hope it's different this time.

burntwingzZz

Innovator

> AMD GPU leaks used to be pretty unreliable. I remember the Vega hype they created and then bombed. Hope it's different this time.

The RX 68xx series isn't bad.

Hear me out.

What if the 4080 12GB is a replacement for the 3060 12GB?

Both are 192-bit, both are 12GB, and neither is available as an FE card.

Is there a possibility that the 3060 12GB did not provide enough profit in absolute numbers, because the same profit % on an already low-priced card yields less than it does on a high-priced card?

Or, given the higher number of transistors, CUDA cores, clock speed and TDP, was it named 4080 instead of 4060?

Also, seeing how the 3060 12GB was similar to the 1080 Ti and a few 2080 cards, Nvidia went with naming it 4080.

Just speculation, not here to defend Nvidia.

> Hear me out.
>
> What if the 4080 12GB is a replacement for the 3060 12GB?

It's nice of you to think that way, but the answer is NO.

Even an RTX 3060 Ti cannot compare to the RTX 4080 12GB. Check the CUDA cores: almost double.

Then this -

The 3060 is way behind in the race. Plus it would be a stupid move from Nvidia. These numbers are for the 16GB GPU, though. November is when we'll know the truth, but one thing is for sure: these are replacing the 3080 Ti and will be at or near 95% of the 3090 Ti.

"OMG the 4090 performance is insane! I can't wait to get the 4080 16GB or 4080 12GB" -Famous last words

Even after so many people pointed out that the pricing and order of SKUs was insane, the release of the 4090 made every YouTuber say "That's worth it in terms of price to performance". Now what are they going to say after looking at this? Where even is the incentive to get the 4080 16GB when you can basically get the 3090 Ti for less?

Also, the 4080 12GB is a $500 card (a rebranded 4070). Nvidia has been playing around a lot with bus widths, but I don't think it was supposed to be a 4060.

> Doesn't it do 4K 120Hz uncompressed? Would the extra 24Hz make any meaningful visual difference?

I mean when you're paying that much it should matter ig.

> AMD insider leakers have kind of confirmed that AMD will most probably match the 4090's rasterization, or maybe even surpass it, at lower power consumption and at a lower price point. I hope it turns out to be true.

Haven't heard any reputable information, but I have heard RDNA3 should improve on the already better efficiency, so I'm all for that. As for performance, even if it reaches 85-90% of the 4090's performance and is priced lower, AMD would gain some serious market share. But I don't see that happening, because if it performs at 90% of the 4090, why wouldn't they just list it for 90% of the price and make more profit themselves? In the end business is business, and seeing how things were during the Ryzen 5000 series launch, I think they care more about profit than about the end consumer or market share.

> Also, seeing how the 3060 12GB was similar to the 1080 Ti and a few 2080 cards, Nvidia went with naming it 4080.
>
> Just speculation, not here to defend Nvidia.

3060 ~ 2070 ~< 1080 Ti (the 1080 Ti still gives the best performance, but with worse efficiency and no RTX/DLSS; FSR and XeSS will change that too).

The 4080 12GB should fall in the 3090/3080 Ti range, but like I've said before, I do believe that it is just a rebranded 4070.

The performance is fine, but what pains me is that for the past generations it was always "Hey! New GPUs with better features for basically the same price! Isn't that insane how they're able to do that?", and now with linear price stacking there is no such excitement, only the fact that these are more efficient.


burntwingzZz

Innovator

The video that we were waiting for. If AMD can improve on RTX and rasterization, the throne is theirs. DLSS 3 creates artifacts, and they are observable when the rasterized frame rate is lower, which could be the case on cards like the RTX 4050, 4060 and so on.

> The video that we were waiting for. If AMD can improve on RTX and rasterization, the throne is theirs. DLSS 3 creates artifacts, and they are observable when the rasterized frame rate is lower, which could be the case on cards like the RTX 4050, 4060 and so on.

Give it some time.

> The video that we were waiting for. If AMD can improve on RTX and rasterization, the throne is theirs.

Nah, it should be the same as last year IMO (7900 XT between the 4080 16GB and the 4090, similar to how the 6900 XT was between the 3080 and 3090). If it beats the 4080 16GB and is priced lower than $1200, then we can applaud AMD for the competition (though I don't see that happening, money > feelings). The gen-over-gen improvement is going to be impressive, but I personally don't think it'll reach the 3090.

> Give it some time.

Yeah, DLSS 4.0 is where interpolation would be "viable", but I'll hold off until the community does tests where the pure rasterization fps is 40fps (interpolated fps --> 50% increase, so 60fps) and interpolation gives playable fps without much added latency. Additional frames can also be added, like they do with MEMC in smartphones, so a theoretical 100% fps boost is also possible in DLSS 4.0.
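For what it's worth, the interpolation arithmetic above can be sketched in a few lines of Python. This is only a toy model of the numbers quoted in the post, not how DLSS works internally: the function name `displayed_fps` and the `rendered_per_generated` parameter are made up for illustration, and it assumes exactly one interpolated frame is inserted for every N rendered frames.

```python
def displayed_fps(rendered_fps: float, rendered_per_generated: int) -> float:
    """Toy model: fps on screen when one interpolated frame is added
    for every `rendered_per_generated` rendered frames (hypothetical)."""
    if rendered_per_generated < 1:
        raise ValueError("need at least one rendered frame per interpolated frame")
    # Each group of N rendered frames becomes N + 1 displayed frames.
    return rendered_fps * (1 + 1 / rendered_per_generated)


if __name__ == "__main__":
    base = 40  # pure rasterization fps from the post
    # 2 rendered : 1 interpolated -> the 50% boost described in the post
    print(displayed_fps(base, 2))
    # 1 rendered : 1 interpolated -> the theoretical 100% boost
    print(displayed_fps(base, 1))
```

With a 2:1 ratio the 40fps base comes out at 60fps, and with 1:1 it doubles to 80fps. Note that this only counts frames; it says nothing about the latency or artifact concerns raised in the thread.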

> Nah, it should be the same as last year IMO (7900 XT between the 4080 16GB and the 4090, similar to how the 6900 XT was between the 3080 and 3090). If it beats the 4080 16GB and is priced lower than $1200, then we can applaud AMD for the competition (though I don't see that happening, money > feelings). The gen-over-gen improvement is going to be impressive, but I personally don't think it'll reach the 3090.
>
> Yeah, DLSS 4.0 is where interpolation would be "viable", but I'll hold off until the community does tests where the pure rasterization fps is 40fps (interpolated fps --> 50% increase, so 60fps) and interpolation gives playable fps without much added latency. Additional frames can also be added, like they do with MEMC in smartphones, so a theoretical 100% fps boost is also possible in DLSS 4.0.

New drivers are just out.

joyceanblue

Contributor
