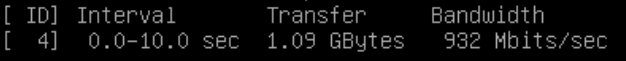

I remember now that it's not straightforward, so here is the step-by-step way I mounted a USB flash drive:

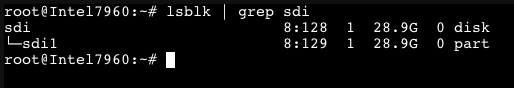

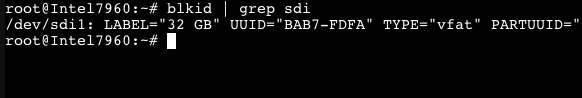

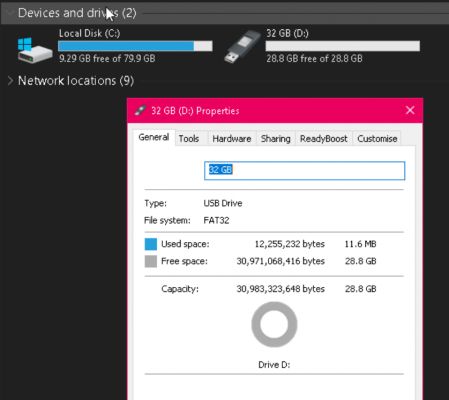

View attachment 121989

It's a 32GB drive,

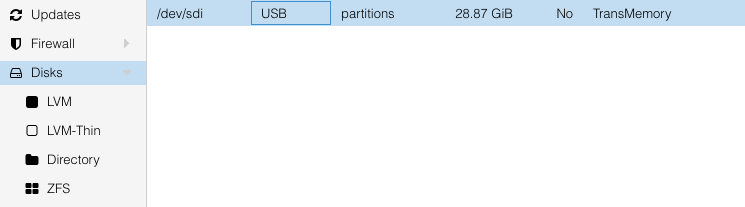

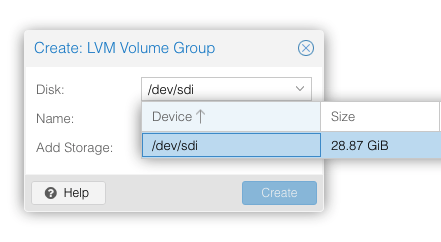

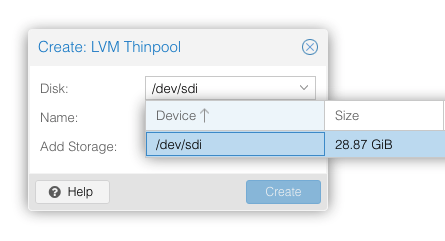

sdi. Here it is recognized in the disks page of that node:

View attachment 121990

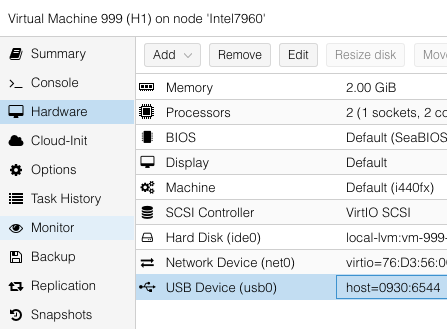

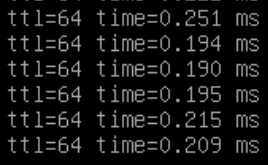
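If you'd rather confirm the device name from the host shell than from the Disks page, `lsblk` can print its device tree as JSON. Below is a minimal Python sketch that picks out USB disks and their partitions. It assumes a util-linux `lsblk` recent enough to support `-J`, and the sample output (including the `sdi` name and the `ABCD-1234` UUID) is hypothetical, standing in for whatever your host reports.

```python
import json
import subprocess

def read_lsblk():
    """Run lsblk on the Proxmox host and return its JSON output as text."""
    result = subprocess.run(
        ["lsblk", "-J", "-b", "-o", "NAME,SIZE,TYPE,TRAN,UUID"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout

def find_usb_disks(lsblk_json):
    """Return (name, size_in_GB, [(partition, fs_uuid), ...]) for each USB disk."""
    data = json.loads(lsblk_json)
    disks = []
    for dev in data.get("blockdevices", []):
        # Keep only whole disks whose transport is USB
        if dev.get("type") != "disk" or dev.get("tran") != "usb":
            continue
        # With -b, SIZE is in bytes; some lsblk versions emit it as a string
        size_gb = round(int(dev["size"]) / 1e9, 1)
        parts = [(p["name"], p.get("uuid")) for p in dev.get("children", [])]
        disks.append((dev["name"], size_gb, parts))
    return disks

# Hypothetical lsblk output: one SATA disk and a 32GB USB stick with one partition
SAMPLE = """{"blockdevices": [
  {"name": "sda", "size": 500107862016, "type": "disk", "tran": "sata", "uuid": null},
  {"name": "sdi", "size": 31914983424, "type": "disk", "tran": "usb", "uuid": null,
   "children": [
     {"name": "sdi1", "size": 31913934848, "type": "part", "tran": null, "uuid": "ABCD-1234"}
   ]}
]}"""

if __name__ == "__main__":
    # On a real host you would use: print(find_usb_disks(read_lsblk()))
    print(find_usb_disks(SAMPLE))
```

A "32GB" stick reports a little under 32 in decimal gigabytes, so matching on approximate size plus the `usb` transport is usually enough to tell it apart from the host's own disks.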

At this point, you can attach it directly to a VM, for example as a USB device, like here:

View attachment 121991

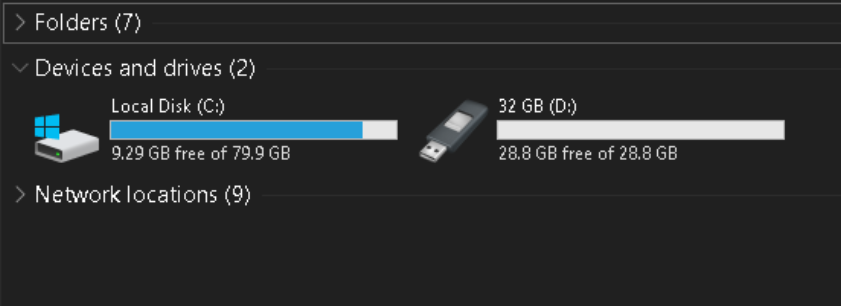
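The same USB passthrough can also be set up from the host shell. Proxmox's `qm set` accepts a `usbN` option of the form `host=<vendor id>:<product id>`. The sketch below turns one line of `lsusb` output into that command. The sample `lsusb` line is hypothetical, the VMID is the `999` used in this post, and the command is only built here, not executed.

```python
import re

# Matches e.g. "Bus 002 Device 003: ID 0781:5581 SanDisk Corp. Ultra"
LSUSB_LINE = re.compile(
    r"^Bus (\d{3}) Device (\d{3}): ID ([0-9a-f]{4}):([0-9a-f]{4})(?:\s+(.*))?$",
    re.IGNORECASE,
)

def usb_passthrough_cmd(lsusb_line, vmid, index=0):
    """Build a 'qm set' argument list that passes a USB device through by vendor:product ID."""
    match = LSUSB_LINE.match(lsusb_line.strip())
    if match is None:
        raise ValueError(f"not an lsusb device line: {lsusb_line!r}")
    vendor, product = match.group(3).lower(), match.group(4).lower()
    return ["qm", "set", str(vmid), f"-usb{index}", f"host={vendor}:{product}"]

if __name__ == "__main__":
    line = "Bus 002 Device 003: ID 0781:5581 SanDisk Corp. Ultra"  # hypothetical device
    print(" ".join(usb_passthrough_cmd(line, 999)))  # qm set 999 -usb0 host=0781:5581
```

Matching by vendor:product ID follows the stick to whichever port it is plugged into. The alternative `host=<bus>-<port>` form pins the passthrough to a physical port instead.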

And it'll show up in the VM as if it were physically plugged in:

View attachment 121992

Or you could choose to pass the disk through directly by its UUID:

View attachment 121993

The UUID is usually very long. Here it's just eight characters split into two groups by a hyphen, which is the format FAT32 and exFAT volumes use.

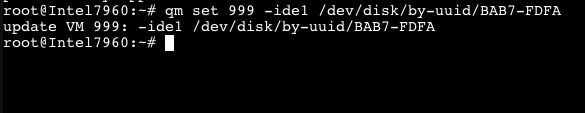

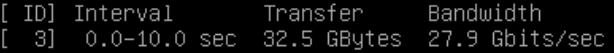
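On the host, `/dev/disk/by-uuid/` holds one symlink per filesystem UUID. Because that UUID belongs to a filesystem, the link usually points at a partition (for example `sdi1`) rather than at the whole disk. Here is a small Python sketch for checking where a UUID resolves before handing it to a VM. The `ABCD-1234` value is hypothetical, and the short-format check assumes a FAT32/exFAT volume.

```python
import os
import re

# FAT32/exFAT volume serials appear as two groups of four hex digits
SHORT_FAT_UUID = re.compile(r"^[0-9A-F]{4}-[0-9A-F]{4}$", re.IGNORECASE)

def resolve_uuid(uuid, base="/dev/disk/by-uuid"):
    """Return (stable_path, real_device) for a filesystem UUID."""
    stable = os.path.join(base, uuid)
    if not os.path.lexists(stable):
        raise FileNotFoundError(f"no filesystem with UUID {uuid} under {base}")
    # The by-uuid entry is a symlink such as ../../sdi1; resolve it to the device node
    return stable, os.path.realpath(stable)

def looks_like_fat_uuid(uuid):
    """True for the short XXXX-XXXX style shown for FAT32/exFAT volumes."""
    return SHORT_FAT_UUID.match(uuid) is not None

if __name__ == "__main__":
    uuid = "ABCD-1234"  # hypothetical UUID
    print(looks_like_fat_uuid(uuid))
    try:
        print(resolve_uuid(uuid))
    except FileNotFoundError as err:
        print(err)
```

The stable `by-uuid` path is what you pass to the VM, since it survives the stick being enumerated as a different `sdX` after a reboot. The resolved device only helps you confirm you picked the right drive.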

I prefer the UUID approach over the serial number or any other designator. You can then attach it with a simple `qm set` command:

View attachment 121994

999 is just the VMID.

I'm using `ide1` as the designator because it's a Windows VM; for Linux, `scsi` is a better choice. The number can be any index on that bus that isn't already in use (IDE only goes from `ide0` to `ide3`). Here, I've already got `ide0` as the boot drive, so I chose `ide1`:

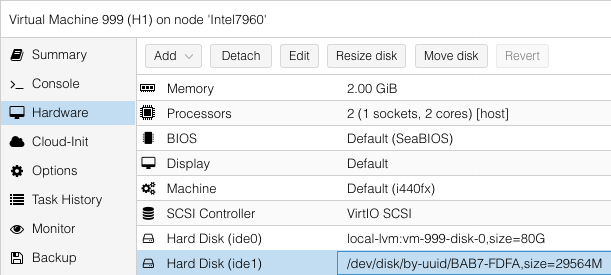

View attachment 121995

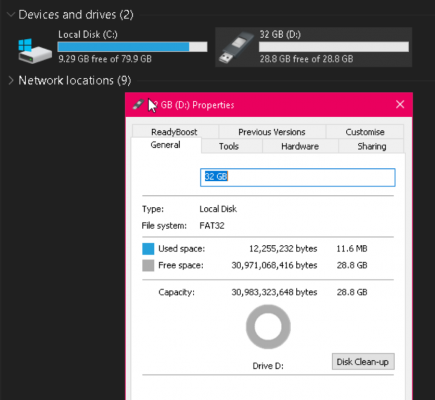

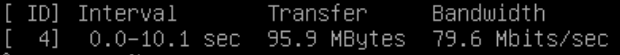
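Picking a free bus index for `qm set` can be automated by reading the VM's current configuration. This is a minimal Python sketch, assuming the text printed by `qm config <vmid>`. The sample config and the `/dev/disk/by-uuid/ABCD-1234` path are hypothetical, and the per-bus limits are the ones Proxmox VE documents (ide0 to ide3, sata0 to sata5, scsi0 to scsi30, virtio0 to virtio15).

```python
import re

# Number of device slots per bus in Proxmox VE
BUS_LIMITS = {"ide": 4, "sata": 6, "scsi": 31, "virtio": 16}

def used_slots(qm_config_text):
    """Collect disk-bus keys (ide0, scsi1, ...) from 'qm config <vmid>' output."""
    pattern = re.compile(r"^((?:ide|sata|scsi|virtio)\d+):", re.MULTILINE)
    return set(pattern.findall(qm_config_text))

def next_free_slot(bus, used):
    """Return the lowest-numbered slot on this bus that is not in use."""
    for i in range(BUS_LIMITS[bus]):
        slot = f"{bus}{i}"
        if slot not in used:
            return slot
    raise ValueError(f"all {bus} slots are in use")

def attach_disk_cmd(vmid, bus, device_path, used):
    """Build a 'qm set' argument list attaching a host device on the next free slot."""
    slot = next_free_slot(bus, used)
    return ["qm", "set", str(vmid), f"-{slot}", device_path]

# Hypothetical 'qm config 999' output for a Windows VM
SAMPLE_CONFIG = """boot: order=ide0;ide2;net0
ide0: local-lvm:vm-999-disk-0,size=64G
ide2: local:iso/Win10.iso,media=cdrom
net0: e1000=BC:24:11:00:00:01,bridge=vmbr0
ostype: win10
scsihw: virtio-scsi-single
"""

if __name__ == "__main__":
    used = used_slots(SAMPLE_CONFIG)
    print(" ".join(attach_disk_cmd(999, "ide", "/dev/disk/by-uuid/ABCD-1234", used)))
```

New VMs often have `ide2` taken by the CD/DVD drive, which is why scanning the config beats guessing a number. Pass `"scsi"` instead of `"ide"` for a Linux guest.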

This is how you'd normally attach drives permanently. If the drive is not present on the host, the VM won't start until you remove it from the VM's Hardware page. For this USB drive that I attached by UUID, Windows sees it as a physical local disk:

View attachment 121996

Previously, when it was attached as a USB device, this is how Windows saw it:

View attachment 121998
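Since a VM with a passed-through disk won't start while that disk is missing from the host, a quick pre-start check can spare a trip to the Hardware page. This Python sketch flags disk entries in `qm config <vmid>` output whose value is an absolute host path that no longer exists. It then builds the matching `qm set <vmid> --delete <slot>` commands. Treating "absolute path" as "passthrough disk" is an assumption of this sketch; storage-managed disks use the `storage:volume` form and are skipped.

```python
import os
import re

# Disk entries whose value starts with an absolute path, e.g. "ide1: /dev/disk/by-uuid/..."
PASSTHROUGH = re.compile(r"^((?:ide|sata|scsi|virtio)\d+):\s*(/[^,\s]+)", re.MULTILINE)

def missing_passthrough_disks(qm_config_text):
    """Return [(slot, path)] for passthrough entries whose host path does not exist."""
    return [
        (slot, path)
        for slot, path in PASSTHROUGH.findall(qm_config_text)
        if not os.path.exists(path)
    ]

def removal_cmds(vmid, missing):
    """Build 'qm set <vmid> --delete <slot>' commands for the missing entries."""
    return [["qm", "set", str(vmid), "--delete", slot] for slot, _ in missing]

if __name__ == "__main__":
    config = "ide0: local-lvm:vm-999-disk-0,size=64G\nide1: /dev/disk/by-uuid/ABCD-1234\n"
    missing = missing_passthrough_disks(config)
    for cmd in removal_cmds(999, missing):
        print(" ".join(cmd))
```

Whether to delete the entry or simply plug the drive back in is your call. The sketch only prints the commands and leaves running them to you.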

If your goal is to set up a VM for media sharing, then attaching the drive by UUID is the cleanest way to bring in a drive that already has data and make that data accessible to the VM, which can then share it on your home network. The VM can also present the drive to your other computers as a network storage location.

There are other ways to attach storage, for example if you want it available across all nodes in a cluster. But that would be on the host side of Proxmox, and not available to any hosted VMs or containers. And if you want to use a single drive for multiple VMs, then you'll need to format the drive and have Proxmox manage it.

References:

This is a new WD drive I got last week. Works ok in windows .. and ubuntu vm ( via sata-usb connector )