Check out Ceph; it integrates really well with Proxmox.

I have a large PC with a 5600X, 32GB RAM, 2x NVMe, 4x Optane 16GB and 6x HDDs. It also contains a 1060 3GB.

It takes a lot of space as it is built in a CM Storm Scout 2 Advanced case.

It is also underutilized: the compute is overkill and storage is 30% used (around 5TB out of 16TB; the drives are in RAIDZ2).

I have a network rack with around 15 gigabit ports on a switch unoccupied. It has some space which I can use for some tiny PCs like the P330 I bought from you.

Is there a configuration like Esxi/ProxMox where I can cluster these multiple tiny PCs to have redundancy (redundancy in compute isn't really necessary, just for storage)?

Currently, in Truenas, if a drive fails I can just replace it and rebuild the array. In case any drive fails, I would like the recovery to be quick and easy.

I don't need a discrete GPU, as an Intel iGPU should be enough for the job the 1060 currently does: transcoding for Jellyfin, which is rarely needed anyway.

Thanks in advance!

Proxmox Thread - Home Lab / Virtualization

- Thread starter Party Monger

- Start date


Mann

Herald

Proxmox allows such a configuration for redundancy. But there are copies of everything, including the RAID array.

rsaeon

Innovator

Is there a configuration like Esxi/ProxMox where I can cluster these multiple tiny PCs to have redundancy (redundancy in compute isn't really necessary, just for storage)?

Proxmox does have High Availability (HA) but it's for VM's running services and not backup/storage: https://pve.proxmox.com/wiki/High_Availability

You'll need shared storage and at least three nodes to make sure, for example, that there's a VM with pihole always available for your network clients. I haven't really ventured into this because of the shared storage and extra node requirements. I have set up a separate network for Corosync (keeping the nodes synced) and that itself required additional network ports on each node and a separate vlan/switch connecting the Corosync interfaces together.
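
A rough sketch of why three nodes is the usual minimum, assuming each node carries one vote and the cluster needs a strict majority to keep running (my understanding of the default Corosync behaviour; QDevices and custom vote weights are ignored here). The function names are made up for illustration:

```python
def quorum_votes(total_nodes: int) -> int:
    """Smallest strict majority, assuming one vote per node."""
    return total_nodes // 2 + 1


def has_quorum(total_nodes: int, online_nodes: int) -> bool:
    """True if the nodes still online hold a strict majority of votes."""
    return online_nodes >= quorum_votes(total_nodes)


if __name__ == "__main__":
    for total in (2, 3, 5):
        survivors = total - 1  # simulate a single node failure
        kept = has_quorum(total, survivors)
        print(f"{total} nodes, 1 down -> quorum kept: {kept}")
```

With two nodes, a single failure leaves one vote out of two, which is not a majority, so the surviving node cannot safely take over.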

For redundant storage, it's much easier to set up VMs with TrueNAS on each host and make sure they're all synced with each other. Personally, I have one NAS as my main storage server, and all of the non-redownloadable data (keys, licenses, configuration files, documents) is synced to two other network devices with Syncthing (which is a two-way sync). I also have a manual one-way sync of these files to another network device that runs every night at 1am. I keep the last 30 days of backups of each file and then one version for each month prior to that. This way, I have my important data in four locations and I have version history that goes back a few years.
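
The retention rule above (everything from the last 30 days, then one version per month) could be sketched roughly like this. It is only an illustration: the post doesn't say which version is kept for each month, so keeping the newest one is an assumption, and `backups_to_keep` is a hypothetical helper, not part of Syncthing or any backup tool:

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today, daily_window=30):
    """Return the set of backup dates to retain.

    Keeps every backup from the last `daily_window` days, plus the newest
    backup of each older calendar month (assumption: "one version per
    month" means the most recent one in that month).
    """
    cutoff = today - timedelta(days=daily_window)
    keep = set()
    newest_per_month = {}
    for d in backup_dates:
        if d > cutoff:
            keep.add(d)  # inside the daily window: keep everything
        else:
            key = (d.year, d.month)
            if key not in newest_per_month or d > newest_per_month[key]:
                newest_per_month[key] = d
    keep.update(newest_per_month.values())
    return keep
```

Anything not in the returned set would be a candidate for pruning.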

That said, I have plans and I can come up with scenarios to make use of multiple Tiny PCs easily!

I just finished setting one up as a server running MQTT + NodeRed + InfluxDB + Grafana and I'm using that to monitor power consumption from a few of the smart plugs I have connected to my network. It's a simple i3 with 4GB of memory and a 250GB ssd, pulls under 10w and shows me nice graphs.

Another I'm setting up is a Proxmox Backup Server, which doesn't need a dedicated node on its own so I'll have a network share on that to store Ansible configuration files and other backups. Snapshots would be very helpful since it feels like I'm always breaking stuff when I intend to streamline things.

I'm also planning a media transcoding server with one of these machines, currently that role is being done by an old laptop. I don't use Youtube subscriptions, I just download new videos using youtube-dl that then get put into a plex library, so that can also be done on the transcoding server.

I want to get back into seeding linux distros (ha) or at least the OSS that I made use of, I used to do this when I was younger, and a TinyPC is perfect for this.

One very important todo is a caching server: we use a ton of bandwidth (over 5TB each month), so it would be nice to cache software/app updates since they account for a lot of it.

The list goes on and on — like my own self hosted password manager, but that is for later once I have an offsite backup as part of my network.

For the last year or so, I've been chasing 99.9% uptime (9 hours of downtime per year) — just for the personal satisfaction aspect of achieving it with second hand batteries and non-enterprise equipment:

| Availability % | Downtime per year |
|---|---|
| 99 | 3.65 days |
| 99.9 | 8.76 hours |
| 99.99 | 52.56 minutes |
| 99.999 | 5.26 minutes |
| 99.9999 | 31.5 seconds |
| 99.99999 | 3.15 seconds |
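
The figures in the table are just the unavailable fraction multiplied by the length of a year. A minimal sketch, assuming a 365-day year (which is what the table's numbers match):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # non-leap year, as the table assumes


def downtime_seconds(availability_percent: float) -> float:
    """Allowed downtime per year, in seconds, for a given availability."""
    return SECONDS_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for pct in (99, 99.9, 99.99, 99.999):
        hours = downtime_seconds(pct) / 3600
        print(f"{pct}% -> {hours:.2f} hours per year")
```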


Decadent_Spectre

Forerunner


You often post about your network/servers; I am curious, is this all for personal use? Is the 5TB/month also for personal use, or are both for work? And what ISP do you use for 5TB/month? IIRC most have a 3.3TB FUP limit.

rsaeon

Innovator

None of this is technically for work — but it has become a source of income over the years.

I have a few Proxmox clusters, one for the home network (pihole/adguard, plex, power monitoring, automation), one for my personal use (nas/backup, vms), one for chia farming and one for passive income by offering cpu cycles and/or bandwidth to companies that do web scraping and price comparisons. I lease a few subnets from providers abroad and route docker containers that I run at home through multiple fiber connections through those subnets.

I have a few Airtel connections, they all have the 3.3TB FUP so I rotate between them throughout the month.

Quoting some of my older posts here:


About two or three years ago, I started collecting DDR3 motherboards to add to my proxmox cluster. These boards go into systems with 120GB SSDs and 32GB of memory to serve as virtualization hosts for the plethora of passive income apps/services that payout through cryptocurrency. The earnings per vm is very low, but as with everything that's a grind, it's a numbers game. 1 VM barely earns anything, but 100 VM's? It becomes interesting.

That's the backstory.

I have a passive income farm of android & windows vm's running a few apps that people recommend at https://www.reddit.com/r/passive_income/ and other subreddits. I'm being intentionally vague about the specifics because demand is far less than supply.

I liked the idea of investing in hardware to earn money without actually doing any physical work. I started with mining about eight years ago, but electricity costs take away a significant portion of your earnings and add an incredible amount of heat and noise. My first mining rig was 4x 6950's with a Sempron 145 in 2012. I made enough when bitcoin hit $1000 but since then I've grown averse to the entire concept of mining with computer hardware.

But I still wanted to pursue the idea of passive income so I looked up what people were doing with phone farms, and stepped into virtual machines not long ago. A 3rd/4th gen quad core system paired with 32gb of ram can host 20 vm's, each of which can potentially earn Rs 100-150 per month these days.

But I no longer use dedicated VM's for this, everything is on docker now.

It was/is a proxmox cluster running low spec Windows 10 VM's (single core with 1GB ram) with passive income data sharing apps like Packetstream, IPRoyal and Honeygain.

And I'm no longer running those specific apps.

Someday I'll share progression photos but this is how it all started:

Decadent_Spectre

Forerunner

That's a very interesting story. I appreciate you taking the time to answer. I look forward to the pictures of progression.

I would very much like to know this in detail. Can I DM you to discuss?

The VMs will likely only run some PhotoPrism instances, Syncthing for syncing data from my phone, and probably Nextcloud. All of these host data that can't be re-downloaded, so they need redundancy for sure.

The nginx reverse proxy, adguard, etc is handled by the opnsense router (P330 with dual intel nics) itself. Should be fine there.

On the other hand, the Jellyfin and minecraft servers do not need storage redundancy. Movies can be downloaded (although pita but how many movie sessions will you miss while waiting for a download?) and minecraft server gets backed up to github (yeah, bite me) every night or so.

Since I only need around 2-3TB of redundant storage, I am thinking: 1 copy on a tiny PC (runs 24x7), 1 copy on my gaming PC (syncs when I start it), and 1 copy on a Dell Wyse box with an external HDD (4TB).

rsaeon

Innovator

photoprism

Thank you for this, I'm adding this to the list of things I want to explore! Most of my photos from the last few years are sitting around in a "to-be-edited" folder for processing in Lightroom before I put them into albums. Photoprism sounds exciting.

nginx reverse proxy

I've been reading about this to get signed certs for local services. Is that what you're using it for?

On the other hand, the Jellyfin and minecraft servers do not need storage redundancy.

I stuck with Plex because I thought I could get it working outside my home network with a paid subscription, but with Tailscale I don't need that feature and can finally look into Jellyfin.

I don't have much media outside of youtube videos that need to be watched before being deleted.

I went on a pop culture 'cleanse' of sorts about ten years ago and completely removed movies, TV shows and music from my life, and it's been enlightening. I used to obsess over cover art, metadata and procuring 40GB Blu-rays, and today I have absolutely no media saved or streamed. I found that I didn't have the inclination to sit through episodes or movies anymore, and music never really helped with anything for me. Often, I would skim through the plot section of a Wikipedia page because I was bored with how things were progressing in the actual movie/episode. With no movies and TV shows in my day, I found a lot more time to do other stuff too, haha.

I do read a lot though, so I have a bunch of ebooks saved somewhere.

Yes and no. Instead of having to expose ports for all web apps, I only expose 80 and 443; the reverse proxy handles the rest. If deployed through Docker, it has an option to auto-generate and renew certificates (via Let's Encrypt). The way I use it, deployed inside OPNsense as a plugin, needs another plugin called ACME for certs. It integrates really well, although the config is a bit cumbersome compared to Docker.
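
To illustrate the "only expose 80 and 443" idea: the proxy looks at the hostname the client asked for and forwards the request to the matching internal service. This is a toy sketch of that lookup only, not how nginx is actually configured; the hostnames, addresses and the `pick_upstream` helper are all made up, and IPv6 literals in the Host header are not handled:

```python
# Hypothetical hostnames and internal addresses, for illustration only.
ROUTES = {
    "jellyfin.example.com": "http://192.168.1.20:8096",
    "photos.example.com": "http://192.168.1.21:2342",
}


def pick_upstream(host_header, routes=ROUTES):
    """Map the requested hostname to an internal service, or None if unknown."""
    host = host_header.split(":")[0].lower()  # drop any port, ignore case
    return routes.get(host)


if __name__ == "__main__":
    print(pick_upstream("jellyfin.example.com"))
    print(pick_upstream("unknown.example.com"))
```

Every service shares the same two public ports; only the hostname decides where a request ends up.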

That is exactly how I use it. It wasn't easy to get Let's Encrypt and NGINX both running on OPNsense (at least for me). But once it has been set up, scaling to multiple hosts/services within the network is very easy.

jinx

Forerunner

If you are running Docker, I'd recommend looking at nginx proxy manager. It's super easy to set up (just copy the compose file from GitHub and do docker compose up), comes with a nice web UI and handles certs. I use the DNS challenge to get valid SSL certs for all my local services.

Doesn't DNS challenge require the hosting provider's API or am I confusing that with Dynamic DNS?

jinx

Forerunner

DNS hosting provider's API: yes. The DNS challenge requires you to add a TXT record to the domain's DNS, and certbot (used by nginx proxy manager internally) automates it using the API key.
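
For the curious, this is roughly what ends up in that TXT record. Per RFC 8555, the value is the unpadded base64url encoding of the SHA-256 digest of the "key authorization" string the ACME client builds from the challenge token and account key. The sketch below only computes the record; `dns01_txt_record` is a hypothetical helper, it is not certbot's code, and wildcard names are not handled:

```python
import base64
import hashlib


def dns01_txt_record(domain: str, key_authorization: str) -> tuple[str, str]:
    """Return (record_name, record_value) for an ACME DNS-01 challenge.

    The value is base64url(SHA-256(key_authorization)) without padding,
    published at _acme-challenge.<domain>.
    """
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    value = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"_acme-challenge.{domain}", value
```

The provider's API key is only needed so the client can create and remove this record automatically at each renewal.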

I use Cloudflare to manage all my domains. Never had any issues with them.

Is there a configuration like Esxi/ProxMox where I can cluster these multiple tiny PCs to have redundancy (redundancy in compute isn't really necessary, just for storage)?

There are, the only issue is performance. For homelab use, not an issue.

AwAcS

Beginner

Hi,

I am not sure if this would be the right place to ask questions but I have been struggling with a few things with proxmox and my Dell Optiplex 3070 Micro.

So far as Internet (router) is concerned, I am using the one provided by my ISP only.

The hardware I am using is a mini PC (Dell Optiplex 3070) with an i3 9100T, 24GB RAM, a 512GB SATA M.2 SSD and another SATA 2.5-inch HDD (formatted as ext4).

I have installed Proxmox on the M.2 SSD with a 100GB partition and am running the following VMs/LXCs:

1. VM-Home Assistant (64gb storage in M.2 SSD & 3GB Ram)

2. VM- Open Media Vault (6gb Ram & 40gb storage for OS and another 1.5TB (HDD) thin allocation for data). Currently running qBittorrent and Jellyfin as docker compose.

3. VM- Nextcloud (8GB RAM, 40GB for OS and 512GB storage on the HDD). The 512GB for storage is formatted in ZFS as I did not have much choice while installing. I installed a pre-installed VM from hanssonit.

4. LXC- Cloudflared: (1gb ram & 8 gb storage in ssd)

The Cloudflared LXC is used to provide tunnels to Nextcloud, qBittorrent, Proxmox and Jellyfin. I have installed another Cloudflare add-on in Home Assistant for redundancy.

Coming to the questions (Kindly bear with me)

1. My foremost concern is whether my method of installation is correct with regard to storage, formatting and distribution of the HDD. I have read that most homelab people separate the compute units from data storage (mostly a NAS).

2. The data on the HDD is currently not backed up. Which solution should I opt for: USB external storage (but how do I take data from Open Media Vault and Nextcloud at the same time?), deploying another VM with Proxmox Backup Server (which again would use another external storage), or spending money on another mini PC to build a NAS with Open Media Vault? (I don't want to spend on another computer and just want to get things accomplished.) How should I go about data backup?

I am trying to keep the crucial VMs (Home Assistant and Nextcloud) backed up to an external USB HDD at regular intervals, mounting the drive only when backing up (it is NTFS and contains other data too), but the process is lengthy.

I want this Proxmox instance to be deploy-and-forget (apart from timely upgrades) while keeping data backed up. (I'm trying not to spend too much money, but I understand I will have to spend some, at least to get a dedicated external storage, which I currently do not have.)


1. Not necessarily; I have seen many people use just one PC for all their tasks. But keep in mind that it is usually either a full-fledged PC or an SFF PC with at least 3 storage slots (1 for boot and VMs + 2 in RAID1 as storage). Since OptiPlex Micros are very efficient, powerful and have a very small footprint, a lot of people use them in their homelabs, mainly for compute: they don't have much scope for upgrades in terms of storage and PCIe expansion, but they are fantastic for VMs and in a Proxmox cluster.

2. I would suggest buying an old PC with a good number of SATA ports and making a NAS out of it. It doesn't have to be too powerful; even a Pentium or an i3 could do the job. This would be the long-term solution. A short-term solution would be to just buy an external HDD and do regular backups to it; you could use something like Duplicacy.

1. Foremost concern is regarding if my method of installation is correct with regard to storage /formatting / distribution of HDD. I have read most Homelab people separate the compute units and data storage (mostly NAS).

Depends on how much you want to replicate enterprise environments. Budgets for homelabs are not going to be great.

2. The data of HDD is currently not backed up. What solution should I opt - USB External Storage (but how to take data from open media vault & Nextcloud at the same time) or to deploy another vm with Proxmox Backup Server (which again would use another external storage) or to consider spending money on another mini PC to build a NAS with Open Media Vault. (Don't want to spend for another computer and want to get things accomplished). How to go about data backup?

If the data is less than 1TB, then look at OneDrive with rclone.

Although I am trying to keep the crucial Vms (Home Assistant & Nextcloud) Backed up in an external USB HDD at regular intervals by mounting the said drive (as the same is in ntfs and contains other data too) when backing up, the said process is lengthy.

Don't back up the VMs first; this is the biggest mistake. You can trade time for repair in homelabs, so back up the configurations first. Once that is done, all you need to do is recreate the same setup. Another advantage is that you keep learning to deploy quickly. If you have the space to back up the entire VM, do that next.
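
As a rough idea of what backing up the configurations could look like in practice, here is a small sketch that packs a list of config files or directories into one compressed archive. Which paths matter depends entirely on the app, so any list passed in is a placeholder; `backup_configs` is a made-up helper, and two paths with the same final name would collide inside the archive:

```python
import tarfile
from pathlib import Path


def backup_configs(config_paths, archive_path):
    """Pack the given config files/directories into one .tar.gz archive.

    Paths that don't exist are skipped. Each entry is stored under its
    final path component only.
    """
    archive_path = Path(archive_path)
    with tarfile.open(archive_path, "w:gz") as tar:
        for path in map(Path, config_paths):
            if path.exists():
                tar.add(path, arcname=path.name)
    return archive_path
```

An archive like this is usually tiny compared to a full VM image, so it is cheap to copy to several places.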

I want this Proxmox instance to be deploy and forget (Apart from timely upgrades) while keeping data backed up. (Trying not to spend too much money but I understand I will have to spend some. Atleast to get a dedicated external storage which I currently do not have.)

Usually it's like that only.

AwAcS

Beginner

Thank you for your reply. Could you kindly elaborate on "backup the configuration"? With Home Assistant, I understand I can use its own backup option and simply restore onto a new instance, but would that work with Nextcloud as well?

rsaeon

Innovator


I haven't used ZFS with Proxmox, so I can't share any advice relating to that, but I have used Proxmox extensively the last few years and have set up a dozen or so nodes.

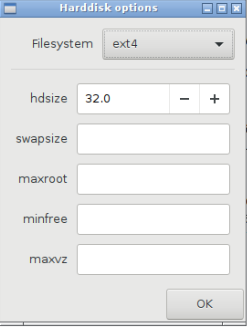

I would have simplified things greatly by skipping partitions. I'd have given the entire SSD to proxmox to use, but use custom settings for the disk configuration step:

I always have swap disabled, so 0 for that, and maxroot is usually 16G — just enough to have one disk image that I might need to make new VM's. I store my disk images on a network location and bring them in through scp whenever I need to setup a new vm so my proxmox nodes are as lean as possible. I set minfree as 16G as well, to prolong SSD life. The last setting, maxvz is unset, allowing the rest of the SSD to be used as VM storage through the automatically created LVM Thin Pool.
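
To get a feel for what those installer settings leave for VM storage, here is a back-of-the-envelope sketch. It assumes the sizing rule as I understand it from the Proxmox installer documentation (data volume = hdsize - root - swap - minfree, with root capped at a quarter of the disk) and ignores the small boot/EFI partitions and thin-pool metadata, so treat the numbers as approximate. The function name and the 476 GiB figure for a "512GB" SSD are assumptions:

```python
def lvm_layout_gib(hdsize, swapsize=0, maxroot=16, minfree=16, maxvz=None):
    """Estimate the installer's LVM volume sizes in GiB (approximate)."""
    root = min(maxroot, hdsize / 4)  # root is capped at hdsize/4
    data = max(hdsize - root - swapsize - minfree, 0)
    if maxvz is not None:
        data = min(data, maxvz)  # maxvz only ever shrinks the data volume
    free = hdsize - root - swapsize - data
    return {"root": root, "swap": swapsize, "data": data, "free": free}


if __name__ == "__main__":
    # Roughly a 512GB SSD with the settings described above.
    print(lvm_layout_gib(476))
```

Leaving maxvz unset means the thin pool simply takes whatever remains after root, swap and the reserved free space.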

The second drive, I would have passed that entire drive to the Open Media Vault VM. I haven't personally used nextcloud but it looks like you can install it inside the OMV vm with docker, so the entire second hard drive can be used by both OMV and nextcloud without being constrained by partitions.

Any backups that you would need now with OMV managing the second drive, can be done inside OMV itself. For the OMV vm configuration and other VM's, Proxmox Backup Server should work fine in a vm with a USB drive passed to it. It barely uses any resources.

I want this Proxmox instance to be deploy and forget (Apart from timely upgrades) while keeping data backed up. (Trying not to spend too much money but I understand I will have to spend some. Atleast to get a dedicated external storage which I currently do not have.)

Proxmox does need monitoring — I run three clusters and I need to check them daily. Stuff still falls through whatever monitoring I do, like the other day I found out that my secondary adblocker was offline and I don't even know for how many days. I just set up portainer today (along with adguardhome-sync) to keep track of these little things.