> Has anyone purchased a 4090 yet? Wanted to know if anyone here had a melting connector issue.

I have, for more than a month now. I have used it with both the Nvidia adapter and custom cables. No issues. I know 5 other 4090 users in India who don't have any issues either.

Nvidia 4000 series cards

- Thread starter kartikoli


- Tags

- gpu pricing


- Status

- Not open for further replies.

animishticomb

Forerunner

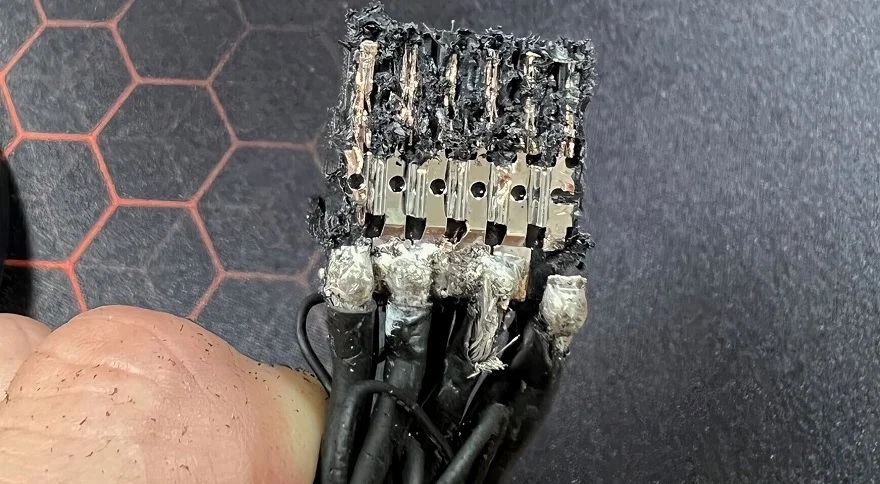

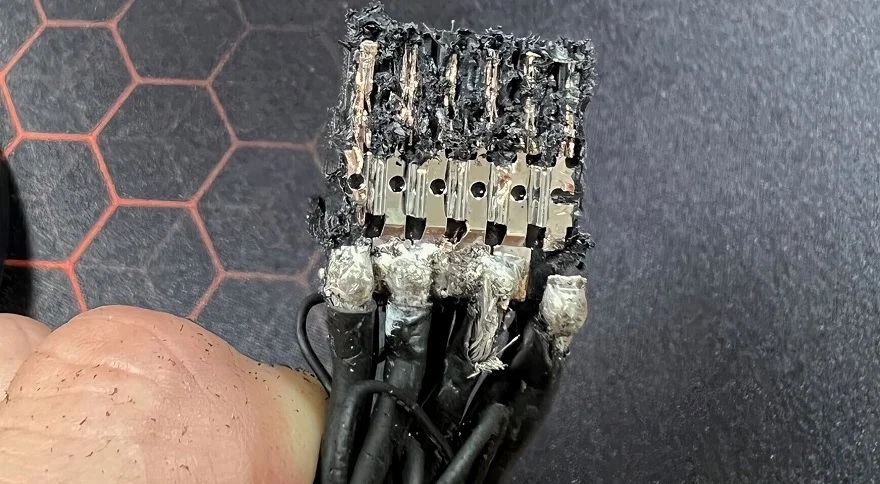

> GamersNexus's and Nvidia's own studies have shown that it is mostly due to user error, i.e. not plugging the adapter all the way into the graphics card's power input.

That is such a bad way of putting it, and it absolves Nvidia of all the blame... If you design a car that requires the user to solve a puzzle every time before the brakes can be applied... When the user fails to apply the brakes... Do you term it user error? Or is it because of the poor design?

burntwingzZz

Innovator

It's not the user; it's an early-to-market issue and a design issue. New ATX 3.0 power supplies will have the new connectors.

Why is it not a user issue? The new connector doesn't have enough depth to seat properly. Maybe it works when connected directly via a 12VHPWR cable from a new PSU. But Nvidia hands out a 3-to-1 12VHPWR adapter, which keeps hanging within the case; that acts as a pulling force and unseats/unsettles the connector/cable over time.

> That is such a bad way of putting it, and it absolves Nvidia of all the blame... If you design a car that requires the user to solve a puzzle every time before the brakes can be applied... When the user fails to apply the brakes... Do you term it user error? Or is it because of the poor design?

Pushing the connector in properly is not a puzzle. Your comparison is out of proportion. The connector/adapter design is not Nvidia's alone. It comes from the PCI-SIG, of which the major manufacturers are members.


animishticomb

Forerunner

> Pushing the connector in properly is not a puzzle. Your comparison is out of proportion.

The problem is the weight of 4 wires and connectors that this one connector has to support, and in a bent state at that. It is poor design. The user has to plug it in fully, that's a given, but the design keeps putting more pressure on the socket every day until it fails. If you keep pulling on a connector by adding weight, it will eventually become loose.

Nvidia took the easy way out and said it's user error. Of course they want all the money and none of the blame. At the end of the day we will just have to wait for more cards to fail; till then Nvidia can have its greed's fill.

> The problem is the weight of 4 wires and connectors that this one connector has to support, and in a bent state at that. It is poor design. The user has to plug it in fully, that's a given, but the design keeps putting more pressure on the socket every day until it fails. If you keep pulling on a connector by adding weight, it will eventually become loose.
>
> Nvidia took the easy way out and said it's user error. Of course they want all the money and none of the blame. At the end of the day we will just have to wait for more cards to fail; till then Nvidia can have its greed's fill.

How did you come to the conclusion that the weight of 4 wires is a problem? You can hate Nvidia for their greediness, and I do too for their ridiculous pricing every new gen, but that's irrelevant to the connector discussion.

Mann

Herald

> How did you come to the conclusion that the weight of 4 wires is a problem? You can hate Nvidia for their greediness, and I do too for their ridiculous pricing every new gen, but that's irrelevant to the connector discussion.

Price may self-correct, just like AMD did for its 7000 series CPUs. The new AMD GPUs will take care of that.

It's the early adopter's problem, both the exorbitant price and the power adapter.

Who said bragging rights come cheap or without problems?

> That is such a bad way of putting it, and it absolves Nvidia of all the blame... If you design a car that requires the user to solve a puzzle every time before the brakes can be applied... When the user fails to apply the brakes... Do you term it user error? Or is it because of the poor design?

Sorry, I didn't put it in better words. It is surely a bad design. Nvidia is at fault. I was just letting the people who want to buy a 40 series card know that they can rest assured their card won't melt, provided they have properly inserted the connector and there's no serious bend in the cable.

burntwingzZz

Innovator

> How did you come to the conclusion that the weight of 4 wires is a problem? You can hate Nvidia for their greediness, and I do too for their ridiculous pricing every new gen, but that's irrelevant to the connector discussion.

PCI-SIG Leak Confirms Revised 12VHPWR Connector is Being Considered

A leaked document from the PCI-SIG has again hinted that a revised 12VHPWR adaptor (utilised in the Nvidia 4090) might be on the way!

www.eteknix.com

> PCI-SIG Leak Confirms Revised 12VHPWR Connector is Being Considered
>
> A leaked document from the PCI-SIG has again hinted that a revised 12VHPWR adaptor (utilised in the Nvidia 4090) might be on the way!
>
> www.eteknix.com
>
> View attachment 152622

Where does the highlighted portion say weight of wires? They are increasing the shrouding, which can only increase weight.

burntwingzZz

Innovator

> I have, for more than a month now. I have used it with both the Nvidia adapter and custom cables. No issues. I know 5 other 4090 users in India who don't have any issues either.

Off topic: can you tell how much your electricity bill and the heat in your room have changed compared to the previous card you were using?

> Off topic: can you tell how much your electricity bill and the heat in your room have changed compared to the previous card you were using?

Actually, I am coming from a 3090. Compared to it, the 4090 runs cooler (nearly 15-20°C lower), so the fans run quieter, and overall perf per watt is better than the 3090. So it's an improvement in my room. But the wallet situation is pretty bad.


Mann

Herald

> Actually, I am coming from a 3090. Compared to it, the 4090 runs cooler (nearly 15-20°C lower), so the fans run quieter, and overall perf per watt is better than the 3090. So it's an improvement in my room.

The 4090 uses the same (if not more) power than the 3090, so the lower temperature can only be explained by vsync. That would also explain it being quieter, although the newer card has a different fan design (FE).

> The 4090 uses the same (if not more) power than the 3090, so the lower temperature can only be explained by vsync. That would also explain it being quieter, although the newer card has a different fan design (FE).

At the same performance, the 4090 is a lot more efficient than the 3090: it consumes less power and hence dissipates less heat. Most of that comes from the process node benefit (8nm -> 4nm) and some from the relatively large cooler on the 4090. Yes, this has also allowed the absolute power limit to be pushed even higher (350W -> 450W) while operating cooler.

I don't use vsync as it may introduce noticeable lag. But for games which exceed 120fps, my OLED's max refresh rate, I set the frame limiter to 120. For a game that runs above 120fps on both cards, with frames limited to 120fps, the 4090 is relatively more thermally efficient.

Below is a power/thermal comparison.

RTX 4090 Vs RTX 3090: We Benchmarked Both - Tech4Gamers

My RTX 4090 vs RTX 3090 guide will tell you all the differences between the two graphics cards by comparing them in all categories.

tech4gamers.com


Looking at the thermals, the RTX 4090 is running at 65°C, and the RTX 3090 is running at 63°C. Overall, that’s a difference of about 3.1%, which is basically negligible.

After going through the specs of the two GPUs, we already knew that the RTX 4090 has a 100W higher TDP. So, at 98% usage, the graphics card is consuming 420.8W, which is about 14.8% more than the RTX 3090 as it is consuming 366.3W at 98% usage.

All in all, a ~90.6% performance upgrade at the cost of ~14.8% higher power consumption seems like a fair deal.
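The figures in the quote above can be turned into a rough perf-per-watt comparison. This is only a minimal sketch: it assumes the ~90.6% performance uplift and the two power readings from the Tech4Gamers excerpt are directly comparable, and the function and variable names are made up for illustration.

```python
def percent_increase(new: float, old: float) -> float:
    """Percentage by which `new` exceeds `old`."""
    return (new - old) / old * 100.0


def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Perf-per-watt of card A relative to card B, given A/B ratios."""
    return perf_ratio / power_ratio


# Numbers taken from the quoted excerpt (both cards at 98% usage).
power_4090_w = 420.8
power_3090_w = 366.3
perf_uplift_pct = 90.6  # quoted "~90.6% performance upgrade"

power_increase_pct = percent_increase(power_4090_w, power_3090_w)
efficiency_ratio = relative_perf_per_watt(
    1 + perf_uplift_pct / 100.0,
    power_4090_w / power_3090_w,
)

print(f"Extra power draw: {power_increase_pct:.1f}%")
print(f"Perf per watt, 4090 relative to 3090: {efficiency_ratio:.2f}x")
```

On these numbers the 4090 does roughly 1.66x the work per watt, which fits the point that it is the more efficient card even though its absolute draw is higher.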


Mann

Herald

> At the same performance, the 4090 is a lot more efficient than the 3090: it consumes less power and hence dissipates less heat.
>
> I don't use vsync as it may introduce noticeable lag. But for games which exceed 120fps, my OLED's max refresh rate, I set the frame limiter to 120.

By the way, does anyone know when the review embargo lifts for the 7900 XT and 7900 XTX?

HDMI 2.1 is a deal breaker for new monitors coming in 2023. Maybe the 4090 Ti will have them if the 7900 XTX falls short of the hype compared to the 4080 or 4090, ratio-wise.

> By the way, does anyone know when the review embargo lifts for the 7900 XT and 7900 XTX?

12 or 13 ...
