salman8506

Herald

From firsthand use, the main difference I found is power consumption, and the card runs very cool compared to 30 series cards... less heat = longer life. My card, when capped to 4K 60, does it with power consumption varying from 100-202 W; the same game with the 3060Ti chewed a constant 180-200 W while performing a bit worse.

Why not get a 3080Ti at around 50-55k in the used market? Performance is not that much worse for a much lower price; the 4070 is definitely not a 55% performance increase over the 3080Ti. But understandable if you are not open to buying used GPUs.

Personally I'd still get the 3080Ti and invest the 25-30k difference for the next GPU, but you do you.

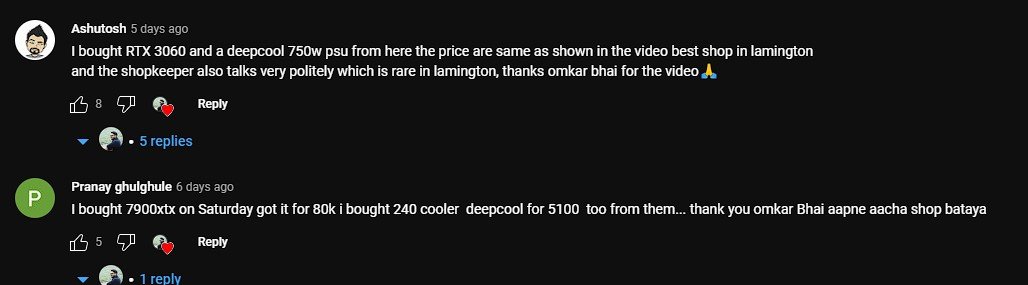

Really? This is at a retail shop on Lamington Road.

And yes, games allocate more. Forza Horizon 5 kept warning of low memory when maxed at 1080p on the 3060Ti, to the point that the game started artifacting after 20-30 minutes of gameplay on the 3060Ti and RX 6600; with 10-12 GB it ran fine up to 4K maxed. When looking for a new card, it's always good to understand where tech is headed before investing.